- I am grateful to those who offered me kindness or assistance in the course of my research: Angie Abbatecola; Barbara Goto; Nick Hopper; Lena Lau-Stewart; Heather Levien; Gordon Lyon; Deirdre Mulligan; Audrey Sillers; David Wagner; Philipp Winter, whose CensorBib is an invaluable resource; the Tor Project and the tor-dev and tor-qa mailing lists; OONI; the traffic-obf mailing list; the Open Technology Fund and the Freedom2Connect Foundation; and the SecML, BLUES, and censorship research groups at UC Berkeley. Thank you. The opinions expressed herein are solely those of the author and do not necessarily represent any other person or organization.

- Availability

- Source code and information related to this document are available at https://www.bamsoftware.com/papers/thesis

Chapter 1

Introduction

This is a thesis about Internet censorship. Specifically, it is about two threads of research that have occupied my attention for the past several years: gaining a better understanding of how censors work, and fielding systems that circumvent their restrictions. These two topics drive each other: better understanding leads to better circumvention systems that take into account censors’ strengths and weaknesses; and the deployment of circumvention systems affords an opportunity to observe how censors react to changing circumstances. The output of my research takes two forms: models that describe how censors behave today and how they may evolve in the future, and tools for circumvention that are sound in theory and effective in practice.

1.1 Scope

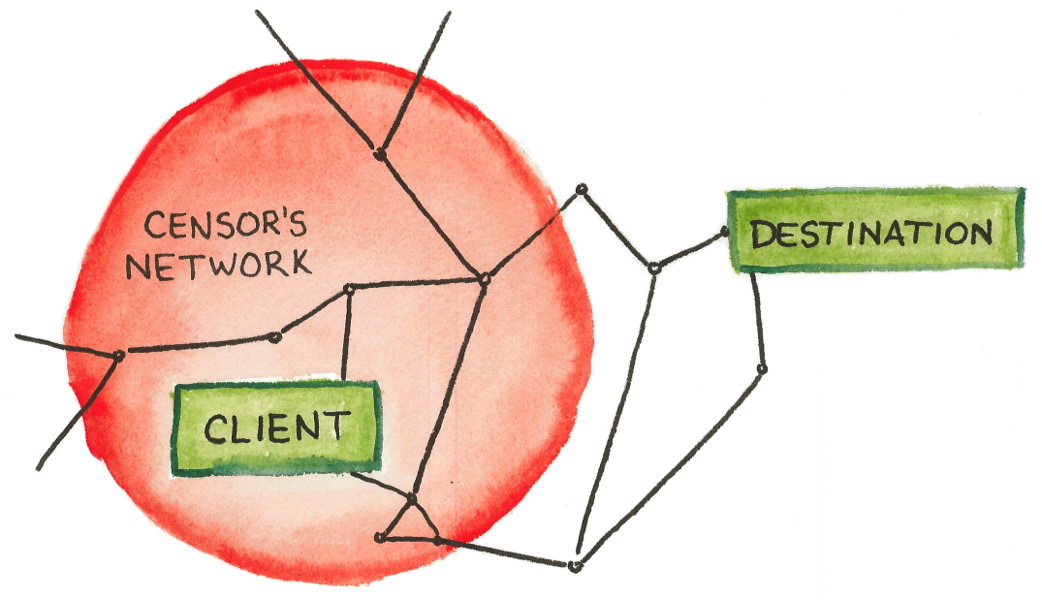

Censorship is a big topic, and even adding the “Internet” qualifier makes it hardly less so. In order to deal with the subject in detail, I must limit the scope. The subject of this work is an important special case of censorship, which I call the “border firewall.” See Figure 1.1.

A client resides within a network that is entirely controlled by a censor. Within the controlled network, the censor may observe, modify, inject, or block any communication along any link. The client’s computer, however, is trustworthy and not controlled by the censor. The censor tries to prevent some subset of the client’s communication with the wider Internet, for instance by blocking communications that discuss certain topics, that are destined to certain network addresses, or that use certain protocols. The client’s goal is to evade the censor’s controls and communicate with some destination that lies outside the censor’s network; successfully doing so is called circumvention. Circumvention means somehow safely traversing a hostile network, eluding detection and blocking. The censor does not control the network outside its border; it may send messages to the outside world, but it cannot control them after they have traversed the border.

This abstract model is a good starting point, but it is not the whole story. We will have to adapt it to fit different situations, sometimes relaxing and sometimes strengthening assumptions. For example, the censor may be weaker than assumed: it may observe only the links that cross the border, not those that lie wholly inside; it may not be able to fully inspect every packet; or there may be deficiencies or dysfunctions in its detection capabilities. Or the censor may be stronger: while not fully controlling outside networks, it may perhaps exert outside influence to discourage network operators from assisting in circumvention. The client may be limited, for technical or social reasons, in the software and hardware they can use. The destination may knowingly cooperate with the client’s circumvention effort, or may not. There are many possible complications, reflecting the messiness and diversity of dealing with real censors. Adjusting the basic model to reflect real-world actors’ motivations and capabilities is the heart of threat modeling. In particular, what makes circumvention possible at all is the censor’s motivation to block only some, but not all, of the incoming and outgoing communications—this assumption will be a major focus of the next chapter.

It is not hard to see how the border firewall model relates to censorship in practice. In a common case, the censor is the government of a country, and the limits of its controlled network correspond to the country’s borders. A government typically has the power to enforce laws and control network infrastructure inside its borders, but not outside. However, this is not the only case: the boundaries of censorship do not always correspond to the border of a country. Content restrictions may vary across geographic locations, even within the same country—Wright et al. [202] identified some reasons why this might be so. A good model for some places is not a single unified regime, but rather several autonomous service providers, each controlling and censoring its own portion of the network, perhaps coordinating with others about what to block and perhaps not. Another important case is that of a university or corporate network, in which the only outside network access is through a single gateway router, which tries to enforce a policy on what is acceptable and what is not. These smaller networks often differ from national- or ISP-level networks in interesting ways, for instance with regard to the amount of overblocking they are willing to tolerate, or the amount of computation they can afford to spend on each communication.

Here are examples of forms of censorship that are in scope:

- blocking IP addresses

- blocking specific network protocols

- blocking DNS resolution for certain domains

- blocking keywords in URLs

- parsing application-layer data (“deep packet inspection”)

- statistical and probabilistic traffic classification

- bandwidth throttling

- active scanning to discover the use of circumvention

Some other censorship-related topics that are not in scope include:

- domain takedowns (affecting all clients globally)

- server-side blocking (servers that refuse to serve certain clients)

- forum moderation and deletion of social media posts

- anything that takes place entirely within the censor’s network and does not cross the border

- deletion-resistant publishing in the vein of the Eternity Service [10] (what Köpsell and Hillig call “censorship resistant publishing systems” [120 §1]), except insofar as access to such services may be blocked

Parts of the abstract model are deliberately left unspecified, to allow for the many variations that arise in practice. The precise nature of “blocking” can take many forms, from packet dropping, to injection of false responses, to softer forms of disruption such as bandwidth throttling. Detection does not have to be purely passive. The censor may do work outside the context of a single connection; for example, it may compute aggregate statistics over many connections, make lists of suspected IP addresses, and defer some analysis for offline processing. The client may cooperate with other parties inside and outside the censor’s network, and indeed almost all circumvention will require the assistance of a collaborator on the outside.
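As a minimal sketch of the cross-connection analysis mentioned above (aggregate statistics feeding a list of suspected IP addresses), the following Python counts, per destination address, how many connections a per-connection test flags, and reports the addresses that exceed a threshold. The test `looks_suspicious`, the threshold `min_hits`, and the data layout are hypothetical, chosen only for illustration; they do not describe any real censor.

```python
from collections import Counter
from typing import Callable, Iterable, Set, Tuple

def suspected_addresses(flows: Iterable[Tuple[str, bytes]],
                        looks_suspicious: Callable[[bytes], bool],
                        min_hits: int) -> Set[str]:
    """flows: (destination address, first packet) pairs seen at the border.

    A single flagged connection proves little, because the per-connection test
    is noisy. Aggregating over many connections lets the censor list only the
    addresses that are flagged repeatedly.
    """
    hits = Counter()
    for dst, first_packet in flows:
        if looks_suspicious(first_packet):
            hits[dst] += 1
    return {dst for dst, n in hits.items() if n >= min_hits}

# Hypothetical weak test: "first byte has its high bit set".
def high_first_byte(packet: bytes) -> bool:
    return len(packet) > 0 and packet[0] >= 0x80

observed = [
    ("203.0.113.5", b"\x9f\x12"), ("203.0.113.5", b"\xc1\x00"),
    ("203.0.113.5", b"\x88"), ("198.51.100.1", b"\x90"),
    ("198.51.100.1", b"GET / HTTP/1.1"),
]
print(suspected_addresses(observed, high_first_byte, min_hits=3))
```

The occasional false hit on 198.51.100.1 stays below the threshold, while the repeatedly flagged 203.0.113.5 is listed. The same logic explains why an address used by only a few clients may escape notice.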

It is a fair criticism that the term “Internet censorship” in the title overreaches, given that I am talking only about one specific manifestation of censorship, albeit an important one. I am sympathetic to this view, and I acknowledge that far more topics could fit under the umbrella of Internet censorship. Nevertheless, for consistency and ease of exposition, in this document I will continue to use “Internet censorship” without further qualification to mean the border firewall case.

1.2 My background

This document describes my research experience from the past five years. The next chapter, “Principles of circumvention,” is the thesis of the thesis, in which I lay out opinionated general principles of the field of circumvention. The remaining chapters are split between the topics of modeling and circumvention.

One’s point of view is colored by experience. I will therefore briefly describe the background to my research. I owe much of my experience to collaboration with the Tor Project, whose anonymity network has been the vehicle for deployment of my circumvention systems. Although Tor was not originally intended as a circumvention system, it has grown into one thanks to pluggable transports, a modularization system for circumvention implementations. I know a lot about Tor and pluggable transports, but I have less experience (especially implementation experience) with other systems, particularly those that are developed in languages other than English. And while I have plenty of operational experience—deploying and maintaining systems with real users—I have not been in a situation where I needed to circumvent regularly, as a user.

Chapter 2

Principles of circumvention

In order to understand the challenges of circumvention, it helps to put yourself in the mindset of a censor. A censor has two high-level functions: detection and blocking. Detection is a classification problem: the censor prefers to permit some communications and deny others, and so it must have some procedure for deciding which communications fall in which category. Blocking follows detection. Once the censor detects some prohibited communication, it must take some action to stop the communication, such as terminating the connection at a network router. Censorship requires both detection and blocking. (Detection without blocking would be called surveillance, not censorship.) The flip side of this statement is that circumvention has two ways to succeed: by eluding detection, or, once detected, by somehow resisting the censor’s blocking action.

A censor is, then, essentially a traffic classifier coupled with a blocking mechanism. Though the design space is large, and many complications are possible, at its heart a censor must decide, for each communication, whether to block or allow, and then effect blocks as appropriate. Like any classifier, a censor is liable to make mistakes. When the censor fails to block something that it would have preferred to block, it is an error called a false negative; when the censor accidentally blocks something that it would have preferred to allow, it is a false positive. Techniques for avoiding detection are often called “obfuscation,” and the term is an appropriate one. It reflects not an attitude of security through obscurity, but rather a recognition that avoiding detection is about making the censor’s classification problem more difficult, and therefore more costly. Forcing the censor to trade false positives for false negatives is the core of all circumvention that is based on avoiding detection. The costs of misclassifications cannot be understood in absolute terms: they only have meaning relative to a specific censor and its resources and motivations. Understanding the relative importance that a censor assigns to classification errors—knowing what it prefers to allow and to block—is key to knowing what kind of circumvention will be successful. Through good modeling, we can make the tradeoffs less favorable for the censor and more favorable for the circumventor.
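Treating the censor as a threshold classifier makes this tradeoff concrete. The sketch below assumes a hypothetical detector that assigns each flow a score. The censor blocks every flow scoring at or above a threshold, and we count its errors against ground-truth labels that the censor itself never sees.

```python
from typing import List, Tuple

# One observed flow: (detector score, whether it really is circumvention).
# The censor never sees the ground-truth label; it is used here only to count errors.
Flow = Tuple[float, bool]

def error_rates(flows: List[Flow], threshold: float) -> Tuple[float, float]:
    """Block every flow whose score is >= threshold.

    Returns (false-positive rate, false-negative rate). Assumes the sample
    contains at least one flow of each kind.
    """
    allowed_traffic = [score for score, circ in flows if not circ]
    circumvention = [score for score, circ in flows if circ]
    false_pos = sum(1 for score in allowed_traffic if score >= threshold)  # overblocking
    false_neg = sum(1 for score in circumvention if score < threshold)     # underblocking
    return false_pos / len(allowed_traffic), false_neg / len(circumvention)

# Hypothetical sample: two circumvention flows, two flows the censor wants to allow.
example = [(0.9, True), (0.6, True), (0.7, False), (0.2, False)]
for t in (0.5, 0.8):
    print(t, error_rates(example, t))
```

With this sample, a threshold of 0.5 gives rates of (0.5, 0.0), and 0.8 gives (0.0, 0.5). Moving the threshold converts one kind of error into the other, but it does not eliminate errors altogether.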

The censor may base its classification decision on whatever criteria it finds practical. I like to divide detection techniques into two classes: detection by content and detection by address. Detection by content is based on the content or topic of the message: keyword filtering and protocol identification fall into this class. Detection by address is based on the sender or recipient of the message: IP address blacklists and DNS response tampering fall into this class. An “address” may be any kind of identifier: an IP address, a domain name, an email address. Of these two classes, my experience is that detection by address is harder to defeat. The distinction is not perfectly clear because there is no clear separation between what is content and what is an address: the layered nature of network protocols means that one layer’s address is another layer’s content. Nevertheless, I find it useful to think about detection techniques in these terms.
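The two classes of detection can be sketched side by side. The keyword list and the blacklisted address (from the 192.0.2.0/24 documentation range) are hypothetical, and the single-byte XOR is a toy stand-in for real encryption. Obfuscating the payload defeats the content check but leaves the address check untouched, which illustrates why detection by address is harder to defeat.

```python
BLOCKED_KEYWORDS = (b"forbidden-topic",)        # hypothetical keyword list
BLOCKED_ADDRESSES = frozenset({"192.0.2.10"})   # hypothetical address blacklist

def detected_by_content(payload: bytes) -> bool:
    """Keyword filtering: look inside the message."""
    return any(keyword in payload for keyword in BLOCKED_KEYWORDS)

def detected_by_address(dst: str) -> bool:
    """Address blacklisting: look only at where the message is going."""
    return dst in BLOCKED_ADDRESSES

def blocked(dst: str, payload: bytes) -> bool:
    return detected_by_address(dst) or detected_by_content(payload)

def toy_obfuscate(payload: bytes) -> bytes:
    """Toy stand-in for encryption: XOR every byte with a constant.
    It hides keywords, but the destination address is still visible."""
    return bytes(b ^ 0x5A for b in payload)

request = b"GET /forbidden-topic HTTP/1.1"
print(blocked("198.51.100.7", request))                 # keyword match
print(blocked("198.51.100.7", toy_obfuscate(request)))  # content hidden
print(blocked("192.0.2.10", toy_obfuscate(b"hello")))   # address still blocked
```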

The censor may block the address of the destination, preventing direct access. Any communication between the client and the destination must therefore be indirect. The indirect link between client and destination is called a proxy, and it must do two things: provide an unblocked address for the client to contact; and somehow mask the contents of the channel and the eventual destination address. I will use the word “proxy” expansively to encompass any kind of intermediary, not only a single host implementing a proxy protocol such as an HTTP proxy or SOCKS proxy. A VPN (virtual private network) is also a kind of proxy, as is the Tor network, as may be a specially configured network router. A proxy is anything that acts on a client’s behalf to assist in circumvention.

Proxies solve the first-order effects of censorship (detection by content and address), but they induce a second-order effect: the censor must now seek out and block proxies, in addition to the contents and addresses that are its primary targets. This is where circumvention research really begins: not with access to the destination per se, but with access to a proxy, which transitively gives access to the destination. The censor attempts to deal with detecting and blocking communication with proxies using the same tools it would for any other communication. Just as it may look for forbidden keywords in text, it may look for distinctive features of proxy protocols; just as it may block politically sensitive web sites, it may block the addresses of any proxies it can discover. The challenge for the circumventor is to use proxy addresses and proxy protocols that are difficult for the censor to detect or block.

The way of organizing censorship and circumvention techniques that I have presented is not the only one. Köpsell and Hillig [120 §4] divide detection into “content” and “circumstances”; their “circumstances” include addresses and also features that I consider more content-like: timing, data transfer characteristics, and protocols. Winter [198 §1.1] divides circumvention into three problems: bootstrapping, endpoint blocking, and traffic obfuscation. Endpoint blocking and traffic obfuscation correspond to my detection by address and detection by content; bootstrapping is the challenge of getting a copy of circumvention software and discovering initial proxy addresses. I tend to fold bootstrapping in with address-based detection; see Section 2.3. Khattak, Elahi, et al. break detection into four aspects [113 §2.4]: destinations, content, flow properties, and protocol semantics. I think of their “content,” “flow properties,” and “protocol semantics” as all fitting under the heading of content. My split between address and content mostly corresponds to Tschantz et al.’s “setup” and “usage” [182 §V] and Khattak, Elahi, et al.’s “communication establishment” and “conversation” [113 §3.1]. What I call “detection” and “blocking,” Khattak, Elahi, et al. call “fingerprinting” and “direct censorship” [113 §2.3], and Tschantz et al. call “detection” and “action” [182 §II].

A major difficulty in developing circumvention systems is that however much you model and try to predict the reactions of a censor, real-world testing is expensive. If you really want to test a design against a censor, not only must you write and deploy an implementation, integrate it with client-facing software like web browsers, and work out details of its distribution—you must also attract enough users to merit a censor’s attention. Any system, even a fundamentally broken one, will work to circumvent most censors, as long as it is used only by one or only a few clients. The true test arises only after the system has begun to scale and the censor to fight back. This phenomenon may have contributed to the unfortunate characterization of censorship and circumvention as a cat-and-mouse game: deploying a flawed circumvention system, watching it become more popular and then get blocked, then starting over again with another similarly flawed system. In my opinion, the cat-and-mouse game is not inevitable, but is a consequence of inadequate understanding of censors. It is possible to develop systems that resist blocking—not absolutely, but quantifiably, in terms of costs to the censor—even after they have become popular.

2.1 Collateral damage

What prevents the censor from shutting down all connectivity within its network, trivially preventing the client from reaching any destination? The answer is that the censor derives benefits from allowing network connectivity, other than the communications which it wants to censor. Or to put it another way: the censor incurs a cost when it overblocks: accidentally blocks something it would have preferred to allow. Because it wants to block some things and allow others, the censor is forced to run as a classifier. In order to avoid harm to itself, the censor permits some measure of circumvention traffic.

The cost of false positives is so central to circumvention that researchers have a special term for it: collateral damage. The term is a bit unfortunate, evoking as it does negative connotations from other contexts. It helps to focus more on the “collateral” than the “damage”: collateral damage is any cost experienced by the censor as a result of incidental blocking done in the course of censorship. The censor must trade its desire to block forbidden communications against its desire to avoid harm to itself, balancing underblocking against overblocking. Ideally, we force the censor into a dilemma: unable to distinguish between circumvention and other traffic, it must choose either to allow circumvention along with everything else, or else block everything and suffer maximum collateral damage. It is not necessary to reach this ideal fully before circumvention becomes possible. Better obfuscation drives up the censor’s error rate and therefore the cost of any blocking. Ideally, the potential “damage” is never realized, because the censor sees the cost as being too great.
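The dilemma can be illustrated with a small cost model. Its assumptions are purely illustrative: each flow has a detector score, each wrongly blocked flow costs the censor `fp_cost`, each circumvention flow let through costs `fn_cost`, and the censor picks whichever blocking threshold is cheapest. When circumvention and other traffic receive identical scores, the only real choices are “block nothing” (threshold `inf`) and “block everything.” Which one wins depends entirely on the two costs.

```python
from typing import List, Tuple

def cheapest_policy(flows: List[Tuple[float, bool]],
                    fp_cost: float, fn_cost: float) -> Tuple[float, float]:
    """flows: (score, is_circumvention). The censor blocks score >= threshold.

    Tries every distinct score as a threshold, plus infinity ("block nothing"),
    and returns (best threshold, total cost at that threshold).
    """
    candidates = sorted({score for score, _ in flows}) + [float("inf")]

    def cost(threshold: float) -> float:
        false_pos = sum(1 for s, circ in flows if s >= threshold and not circ)
        false_neg = sum(1 for s, circ in flows if s < threshold and circ)
        return false_pos * fp_cost + false_neg * fn_cost

    best = min(candidates, key=cost)
    return best, cost(best)

# Indistinguishable traffic: every flow gets the same score.
same_score = [(0.5, True), (0.5, False), (0.5, False)]
print(cheapest_policy(same_score, fp_cost=10, fn_cost=1))  # allowing everything is cheaper
print(cheapest_policy(same_score, fp_cost=1, fn_cost=5))   # blocking everything is cheaper
```

The first call returns `(inf, 1)` (block nothing) and the second returns `(0.5, 2)` (block everything). In this model the circumventor’s lever is `fp_cost`: the more the censor values the traffic that circumvention hides among, the more likely “block nothing” is the cheaper option.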

Collateral damage, being an abstract “cost,” can take many forms. It may come in the form of civil discontent, as people try to access web sites and get annoyed with the government when unable to do so. It may be reduced productivity, as workers are unable to access resources they need to do their job. This is the usual explanation offered for why the Great Firewall of China has never blocked GitHub for more than a few days, despite GitHub’s being used to host and distribute circumvention software: GitHub is so deeply integrated into software development that programmers cannot get work done when it is blocked.

Collateral damage, as with other aspects of censorship, cannot be understood in isolation, but only in relation to a particular censor. Suppose that blocking one web site results in the collateral blocking of a hundred more. Is that a large amount of collateral damage? It depends. Are those other sites likely to be visited by clients in the censor’s network? Are they in the local language? Do professionals and officials rely on them to get their job done? Is someone in the censorship bureau likely to get fired as a result of their blocking? If the answers to these questions are yes, then yes, the collateral damage is likely to be high. But if not, then the censor could take or leave those hundred sites—it doesn’t matter. Collateral damage is not just any harm that results from censorship, it is harm that is felt by the censor.

Censors may take actions to reduce collateral damage while still blocking most of what they intend to. (Another way to think of it is: reducing false positives without increasing false negatives.) For example, Winter and Lindskog [199] observed that the Great Firewall preferred to block individual ports rather than entire IP addresses, probably in a bid to reduce collateral damage. Local circumstances may serve to reduce collateral damage: for example, if a domestic replacement exists for a foreign service, the censor may block the foreign service more easily.

The censor’s reluctance to cause collateral damage is what makes circumvention possible in general. (There are some exceptions, discussed in the next section, where the censor can detect but for some reason cannot block.) To deploy a circumvention system is to make a bet: that the censor cannot field a classifier that adequately distinguishes the traffic of the circumvention system from other traffic which, if blocked, would result in collateral damage. Even steganographic circumvention channels that mimic some other protocol ultimately derive their blocking resistance from the potential of collateral damage. For example, a protocol that imitates HTTP can be blocked by blocking HTTP—the question then is whether the censor can afford to block HTTP. And that’s in the best case, assuming that the circumvention protocol has no “tell” that enables the censor to distinguish it from the cover protocol it is trying to imitate. Indistinguishability is a necessary but not sufficient condition for blocking resistance: that which you are trying to be indistinguishable from must also have sufficient collateral damage. It’s no use to have a perfect steganographic imitation of a protocol that the censor doesn’t mind blocking.

In my opinion, collateral damage provides a more productive way to think about the behavior of censors than do alternatives. It takes into account different censors’ differing resources and motivations, and so is more useful for generic modeling. Moreover, it gets to the heart of what makes traffic resistant to blocking. There are other ways of characterizing censorship resistance. Many authors—Burnett et al. [25], and Jones et al. [Jones2014a], for instance—call the essential element “deniability,” meaning that a client can plausibly claim to have been doing something other than circumventing when confronted with a log of their network activity. Khattak, Elahi, et al. [113 §4] consider “deniability” separately from “unblockability.” Houmansadr et al. [103, 104, 105] used the term “unobservability,” which I feel fails to capture the censor’s essential function of distinguishing, not merely observing. Brubaker et al. [23] used the term “entanglement,” which I found enlightening. What they call entanglement I think of as indistinguishability—keeping in mind that that which you are trying to be indistinguishable from must be valued by the censor. Collateral damage provides a way to make statements about censorship resistance quantifiable, at least in a loose sense. Rather than saying, “the censor cannot block X,” or even, “the censor is unwilling to block X,” it is better to say “in order to block X, the censor would have to do Y,” where Y is some action bearing a cost for the censor. A statement like this makes it clear that some censors may be able to afford the cost of blocking and others may not; there is no “unblockability” in absolute terms. Now, actually quantifying the value of Y is a task in itself, by no means a trivial one. A challenge for future work in this field is to assign actual numbers (e.g., in dollars) to the costs borne by censors. If a circumvention system becomes blocked, it may simply mean that the circumventor overestimated the collateral damage or underestimated the censor’s capacity to absorb it.

We have observed that the risk of collateral damage is what prevents the censor from shutting down the network completely—and yet, censors do occasionally enact shutdowns or daily “curfews.” Shutdowns are costly—West [191] looked at 81 shutdowns in 19 countries in 2015 and 2016, and estimated that they collectively cost $2.4 billion in losses to gross domestic product. Deloitte [40] estimated that shutdowns cost millions of dollars per day per 10 million population, the amount depending on a country’s level of connectivity. This does not necessarily contradict the theory of collateral damage. It is just that, in some cases, a censor reckons that the benefits of a shutdown outweigh the costs. As always, the outcome depends on the specific censor: censors that don’t benefit as much from the Internet don’t have as much to lose by blocking it. The fact that shutdowns are limited in duration shows that even censors that can afford a shutdown cannot afford to keep it up forever.
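For scale, dividing West’s total evenly across the shutdowns studied gives a rough per-shutdown average. This is only arithmetic on the figures above. Actual losses surely varied widely from one shutdown to another, and West’s total does not imply an even split.

$$\frac{\$2.4 \times 10^{9}}{81\ \text{shutdowns}} \approx \$29.6 \times 10^{6}\ \text{per shutdown}$$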

Complicating everything is the fact that censors are not bound to act rationally. Like any other large, complex entity, a censor is prone to err, to act impetuously, to make decisions that cause more harm than good. The imposition of censorship in the first place, I suggest, is exactly such an irrational action, retarding progress at the greater societal level.

2.2 Content obfuscation strategies

There are two general strategies to counter content-based detection. The first is to mimic some content that the censor allows, like HTTP or email. The second is to randomize the content, making it dissimilar to anything that the censor specifically blocks.

Tschantz et al. [182] call these two strategies “steganography” and “polymorphism” respectively. It is not a strict categorization—any real system will incorporate a bit of both. The two strategies reflect differing conceptions of censors. Steganography works against a “whitelisting” or “default-deny” censor, one that permits only a set of specifically enumerated protocols and blocks all others. Polymorphism, on the other hand, fails against a whitelisting censor, but works against a “blacklisting” or “default-allow” censor, one that blocks a set of specifically enumerated protocols and allows all others.
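The asymmetry between the two kinds of censor can be sketched in a few lines. The sketch assumes a hypothetical classifier that labels each flow as a recognized protocol or as `"unknown"`, with example whitelist and blacklist contents. A polymorphic protocol shows up as `"unknown"`, and a perfect steganographic imitation shows up as its cover protocol.

```python
# Hypothetical censor configuration, for illustration only.
RECOGNIZED = frozenset({"http", "tls", "ssh", "obfs2"})  # what the classifier can identify
WHITELIST = frozenset({"http", "tls"})  # default-deny: only these pass
BLACKLIST = frozenset({"obfs2"})        # default-allow: only these are blocked

def classify(appears_as: str) -> str:
    """Map what a flow looks like on the wire to the censor's label."""
    return appears_as if appears_as in RECOGNIZED else "unknown"

def whitelist_allows(appears_as: str) -> bool:
    return classify(appears_as) in WHITELIST

def blacklist_allows(appears_as: str) -> bool:
    return classify(appears_as) not in BLACKLIST

for flow in ("random-looking bytes", "http", "obfs2"):
    print(flow, whitelist_allows(flow), blacklist_allows(flow))
```

Random-looking bytes pass the blacklist but not the whitelist, while a flawless imitation of HTTP passes both. Against the whitelist, the imitation is only as safe as the censor’s reluctance to block HTTP itself.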

This is not to say that steganography is strictly superior to polymorphism—there are tradeoffs in both directions. Effective mimicry can be difficult to achieve, and in any case its effectiveness can only be judged against a censor’s sensitivity to collateral damage. Whitelisting, by its nature, tends to cause more collateral damage than blacklisting. And just as obfuscation protocols are not purely steganographic or polymorphic, real censors are not purely whitelisting or blacklisting. Houmansadr et al. [103] exhibited weaknesses in “parrot” circumvention systems that imperfectly mimic a cover protocol. Mimicking a protocol in every detail, down to its error behavior, is difficult, and any inconsistency is a potential feature that a censor may exploit. Wang et al. [186] found that some of the proposed attacks against parrot systems would be impractical due to high false-positive rates, but offered other attacks designed for efficiency and low false positives. Geddes et al. [95] showed that even perfect imitation may leave vulnerabilities due to mismatches between the cover protocol and the carried protocol. For instance, randomly dropping packets may disrupt circumvention more than normal use of the cover protocol. It’s worth noting, though, that apart from active probing and perhaps entropy measurement, most of the attacks proposed in academic research have not been used by censors in practice.

Some systematizations (for example those of Brubaker et al. [23 §6]; Wang et al. [186 §2]; and Khattak, Elahi, et al. [113 §6.1]) further subdivide steganographic systems into those based on mimicry (attempting to replicate the behavior of a cover protocol) and tunneling (sending through a genuine implementation of the cover protocol). I do not find the distinction very useful, except when discussing concrete implementation choices. To me, there is no clear division: there are various degrees of fidelity in imitation, and tunneling only tends to offer higher fidelity than does mimicry.

I will list some circumvention systems that represent the steganographic strategy. Infranet [62], way back in 2002, built a covert channel within HTTP, encoding upstream data as crafted requests and downstream data as steganographic images. StegoTorus [190] uses custom encoders to make traffic resemble common HTTP file types, such as PDF, JavaScript, and Flash. SkypeMorph [139] mimics a Skype video call. FreeWave [105] modulates a data stream into an acoustic signal and transmits it over VoIP. Format-transforming encryption, or FTE [58], forces traffic to conform to a user-specified syntax: if you can describe it, you can imitate it. Despite receiving much research attention, steganographic systems have not been used as much in practice as polymorphic ones. Of the listed systems, only FTE has seen substantial deployment.

There are many examples of the randomized, polymorphic strategy. An important subclass of these comprises the so-called look-like-nothing systems that encrypt a stream without any plaintext header or framing information, so that it appears to be a uniformly random byte sequence. A pioneering design was the obfuscated-openssh of Bruce Leidl [122], which aimed to hide the plaintext packet metadata in the SSH protocol. obfuscated-openssh worked, in essence, by first sending an encryption key, and then sending ciphertext encrypted with that key. The encryption of the obfuscation layer was an additional layer, independent of SSH’s ordinary encryption. A censor could, in principle, passively detect and deobfuscate the protocol by recovering the key and using it to decrypt the rest of the stream. obfuscated-openssh could optionally incorporate a pre-shared password into the key derivation function, which would protect against this attack. Dust [195] similarly randomized bytes (at least in its v1 version—later versions permitted fitting to distributions other than uniform). It was not susceptible to passive deobfuscation because it required an out-of-band key exchange to happen before each session. Shadowsocks [170] is a lightweight encryption layer atop a simple proxy protocol.
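To make the passive-deobfuscation weakness concrete, here is a toy model of the “send a key, then ciphertext under that key” design. The SHA-256 counter-mode keystream is a stand-in chosen so the example needs only the Python standard library. It is not the cipher or key derivation that obfuscated-openssh actually used, and the 16-byte seed length is likewise an assumption.

```python
import hashlib
import os

SEED_LEN = 16  # assumed length of the key material sent in the clear

def keystream(key: bytes, n: int) -> bytes:
    """Toy keystream: concatenated SHA-256(key || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:n])

def xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))

def derive_key(seed: bytes, password: bytes = b"") -> bytes:
    """Key derivation; an optional pre-shared password is mixed in."""
    return hashlib.sha256(seed + password).digest()

def obfuscate(plaintext: bytes, password: bytes = b"") -> bytes:
    seed = os.urandom(SEED_LEN)  # sent first, unencrypted
    key = derive_key(seed, password)
    return seed + xor(plaintext, keystream(key, len(plaintext)))

def passive_deobfuscate(wire: bytes, password: bytes = b"") -> bytes:
    """What an eavesdropper who knows the scheme can do: read the seed off
    the wire, derive the same key, and decrypt. Without the right password,
    the derived key is wrong and the output is garbage."""
    seed, body = wire[:SEED_LEN], wire[SEED_LEN:]
    return xor(body, keystream(derive_key(seed, password), len(body)))

msg = b"SSH-2.0-example\r\n"
print(passive_deobfuscate(obfuscate(msg)) == msg)                       # censor succeeds
print(passive_deobfuscate(obfuscate(msg, password=b"pre-shared")) == msg)  # censor fails
```

The bytes on the wire look random, but anyone who knows the algorithm can undo the obfuscation. Only a secret that never crosses the wire, like the optional password, prevents this.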

There is a line of successive look-like-nothing protocols—obfs2, obfs3, ScrambleSuit, and obfs4—which I like because they illustrate the mutual advances of censors and circumventors over several years. obfs2 [110], which debuted in 2012 in response to blocking in Iran [43], uses very simple obfuscation inspired by obfuscated-openssh: it is essentially equivalent to sending an encryption key, then the rest of the stream encrypted with that key. obfs2 is detectable, with no false negatives and negligible false positives, by even a passive censor who knows how it works; and it is vulnerable to active probing attacks, where the censor speculatively connects to servers to see what protocols they use. However, it sufficed against the keyword- and pattern-based censors of its era. obfs3 [111]—first available in 2013 but not really released to users until 2014 [152]—was designed to fix the passive detectability of its predecessor. obfs3 employs a Diffie–Hellman key exchange that prevents easy passive detection, but it can still be subverted by an active man in the middle, and remains vulnerable to active probing. (The Great Firewall of China had begun active-probing for obfs2 by January 2013, and for obfs3 by August 2013—see Table 4.2.) ScrambleSuit [200], first available to users in 2014 [29], arose in response to the active-probing of obfs3. Its innovations were the use of an out-of-band secret to authenticate clients, and traffic shaping techniques to perturb the underlying stream’s statistical properties. When a client connects to a ScrambleSuit proxy, it must demonstrate knowledge of the out-of-band secret before the proxy will respond, which prevents active probing. obfs4 [206], first available in 2014 [154], is an incremental advancement on ScrambleSuit that uses more efficient cryptography, and additionally authenticates the key exchange to prevent active man-in-the-middle attacks.
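The defense against active probing that ScrambleSuit introduced, in which the proxy refuses to respond until the client demonstrates knowledge of an out-of-band secret, can be sketched as follows. This is not the actual handshake or wire format of ScrambleSuit or obfs4. It assumes a simple first message consisting of a random nonce followed by an HMAC-SHA256 of that nonce under the shared secret. It also adds a naive replay cache, because a censor that captured a genuine handshake could otherwise replay it as a probe.

```python
import hashlib
import hmac
import os

NONCE_LEN = 16
TAG_LEN = 32  # length of an HMAC-SHA256 digest

def client_hello(secret: bytes) -> bytes:
    """First message: random nonce plus a MAC proving knowledge of `secret`."""
    nonce = os.urandom(NONCE_LEN)
    return nonce + hmac.new(secret, nonce, hashlib.sha256).digest()

class Proxy:
    def __init__(self, secret: bytes):
        self.secret = secret
        self.seen_nonces = set()  # naive, unbounded replay cache (illustration only)

    def handle(self, first_message: bytes):
        """Reply only to an authenticated hello that has not been seen before.
        Anything else gets None: the proxy says nothing to a prober."""
        if len(first_message) != NONCE_LEN + TAG_LEN:
            return None
        nonce, tag = first_message[:NONCE_LEN], first_message[NONCE_LEN:]
        expected = hmac.new(self.secret, nonce, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None
        if nonce in self.seen_nonces:
            return None
        self.seen_nonces.add(nonce)
        return b"continue-handshake"  # hypothetical placeholder for the real protocol

secret = os.urandom(32)  # distributed out of band along with the proxy address
proxy = Proxy(secret)
hello = client_hello(secret)
print(proxy.handle(hello))                     # legitimate client
print(proxy.handle(hello))                     # replayed by a censor
print(proxy.handle(b"GET / HTTP/1.1\r\n\r\n"))  # generic active probe
```

A prober without the secret, or one that replays a captured hello, receives the same silence. From the prober’s point of view, the proxy is indistinguishable from a port that simply never answers.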

There is an advantage in designing polymorphic protocols, as opposed to steganographic ones, which is that every proxy can potentially have its own characteristics. ScrambleSuit and obfs4, in addition to randomizing packet contents, also shape packet sizes and timing to fit random distributions. Crucially, the chosen distributions are consistent within each proxy, but vary across proxies. That means that even if a censor is able to build a profile for a particular proxy, it is not necessarily useful for detecting other instances.
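The idea of per-proxy traffic shaping can be sketched by seeding a random generator from a proxy-specific secret. The result is a packet-length distribution that stays stable for one proxy but differs between proxies. The number of bins, the 1448-byte maximum, and the derivation label are assumptions for illustration. They are not the parameters or derivation used by ScrambleSuit or obfs4.

```python
import hashlib
import random
from typing import List, Tuple

Distribution = Tuple[List[int], List[float]]

def proxy_length_distribution(proxy_secret: bytes, n_bins: int = 8,
                              max_len: int = 1448) -> Distribution:
    """Derive a per-proxy probability distribution over packet lengths.
    The same secret always yields the same distribution."""
    digest = hashlib.sha256(b"length-distribution" + proxy_secret).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    lengths = sorted(rng.sample(range(1, max_len + 1), n_bins))
    weights = [rng.random() for _ in lengths]
    total = sum(weights)
    return lengths, [w / total for w in weights]

def sample_length(dist: Distribution, rng: random.Random) -> int:
    """Pick the length of the next packet to send, e.g. by padding."""
    lengths, probs = dist
    return rng.choices(lengths, weights=probs, k=1)[0]

dist_a = proxy_length_distribution(b"proxy-A secret")
dist_b = proxy_length_distribution(b"proxy-B secret")
print(dist_a[0])
print(dist_b[0])
```

A censor that profiles proxy A learns `dist_a`, which says little about `dist_b`. This is the property the text describes: a profile of one proxy is not necessarily useful for detecting the others.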

2.3 Address blocking resistance strategies

The first-order solution for reaching a destination whose address is blocked is to instead route through a proxy. But a single, static proxy is not much better than direct access, for circumvention purposes—a censor can block the proxy just as easily as it can block the destination. Circumvention systems must come up with ways of addressing this problem.

There are two reasons why resistance to blocking by address is challenging. The first is due to the nature of network routing: the client must, somehow, encode the address of the destination into the messages it sends. The second is the insider attack: legitimate clients must have some way to discover the addresses of proxies. By pretending to be a legitimate client, the censor can learn those addresses in the same way.

Compared to content obfuscation, there are relatively few strategies for resistance to blocking by address. They are basically five:

- sharing private proxies among only a few clients

- having a large population of secret proxies and distributing them carefully

- having a very large population of proxies and treating them as disposable

- proxying through a service with high collateral damage

- address spoofing

The simplest proxy infrastructure is no infrastructure at all: require every client to set up and maintain a proxy for their own personal use, or for a few of their friends. As long as the use of any single address remains low, it may escape the censor’s notice [49 §4.2]. The problem with this strategy, of course, is usability and scalability. If it were easy for everyone to set up their own proxy on an unblocked address, they would do it, and blocking by address would not be a concern. The challenge is making such techniques general so they are usable by more than experts. uProxy [184] is now working on just that: automating the process of setting up a proxy on a server.

What Köpsell and Hillig call the “many access points” model [120 §5.2] has been adopted in some form by many circumvention systems. In this model, there are many proxies in operation. They may be full-fledged general-purpose proxies, or only simple forwarders to a more capable proxy. They may be operated by volunteers or coordinated centrally. In any case, the success of the system hinges on being able to sustain a population of proxies, and distribute information about them to legitimate users, without revealing too many to the censor. Both of these considerations pose challenges.

Tor’s blocking resistance design [49], based on secret proxies called “bridges,” was of this kind. Volunteers run bridges, which report themselves to a central database called BridgeDB [181]. Clients contact BridgeDB through some unblocked out-of-band channel (HTTPS, email, or word of mouth) in order to learn bridge addresses. The BridgeDB server takes steps to prevent the easy enumeration of its database [124]. Each request returns only a small set of bridges, and repeated requests by the same client return the same small set (keyed by a hash of the client’s IP address prefix or email address). Requests through the HTTPS interface require the client to solve a captcha, and email requests are honored only from the domains of email providers that are known to limit the rate of account creation. The population of bridges is partitioned into “pools”—one pool for HTTPS distribution, one for email, and so on—so that if an adversary manages to enumerate one of the pools, it does not affect the bridges of the others. But even these defenses may not be enough. Despite public appeals for volunteers to run bridges (for example Dingledine’s initial call in 2007 [44]), there have never been more than a few thousand of them, and Dingledine reported in 2011 that the Great Firewall of China managed to enumerate both the HTTPS and email pools [45 §1, 46 §1].
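The "same client, same small set" property and the partition into pools can be sketched with keyed hashes. This is a hypothetical simplification, not BridgeDB's code: the /16 prefix length, the pool names, the HMAC-SHA256 ranking, and the function names are all assumptions.

```python
import hashlib
import hmac
import ipaddress

def client_key(client: str, prefix_len: int = 16) -> str:
    """Map a requester to a stable key: its IPv4 prefix, or its email address."""
    if "@" in client:
        return client.strip().lower()
    return str(ipaddress.ip_network(f"{client}/{prefix_len}", strict=False))

def bridges_for(client: str, pool: list, secret: bytes, k: int = 3) -> list:
    """Return the same k bridges for every request that maps to the same key.

    Bridges are ranked by an HMAC of (client key, bridge) and the k lowest
    are returned. Without `secret`, an outsider cannot predict the answer.
    """
    key = client_key(client).encode()

    def rank(bridge: str) -> bytes:
        return hmac.new(secret, key + b"|" + bridge.encode(), hashlib.sha256).digest()

    return sorted(pool, key=rank)[:k]

def assign_pool(bridge: str, secret: bytes, pools=("https", "email", "unallocated")) -> str:
    """Partition bridges into disjoint distribution pools by a keyed hash."""
    h = hmac.new(secret, bridge.encode(), hashlib.sha256).digest()
    return pools[int.from_bytes(h[:4], "big") % len(pools)]
```

Two requests from addresses in the same /16 get identical answers, so a censor that controls many addresses in one prefix learns no more than a single client would; enumerating a pool then requires many distinct prefixes or email accounts.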

Tor relies on BridgeDB to provide address blocking resistance for all its transports that otherwise have only content obfuscation. This is a great strength of such a design: it allows content obfuscation to be developed, to some extent, independently, relying on an existing generic proxy distribution mechanism to produce an overall working system. There is a whole line of research, in fact, on the question of how best to distribute information about an existing population of proxies, which is known as the “proxy distribution problem” or “proxy discovery problem.” Proposals such as Proximax [134], rBridge [188], and Salmon [54] aim to make proxy distribution robust by tracking the reputation of clients and the unblocked lifetimes of proxies.

A way to make proxy distribution more robust against censors (but at the same time less usable by clients) is to “poison” the set of proxy addresses with the addresses of important servers, the blocking of which would result in high collateral damage. VPN Gate employed this idea [144 §4.2], mixing into their public proxy list the addresses of root DNS servers and Windows Update servers.

Apart from “in-band” discovery of bridges via subversion of a proxy distribution system, one must also worry about “out-of-band” discovery, for example by mass scanning [46 §6, 49 §9.3]. Durumeric et al. found about 80% of existing (unobfuscated) Tor bridges [57 §4.4] by scanning all of IPv4 on a handful of common bridge ports. Matic et al. had similar results in 2017 [133 §V.D], using public search engines in lieu of active scanning. The best solution to the scanning problem is to do as ScrambleSuit [200], obfs4 [206], and Shadowsocks [170] do, and associate with each proxy a secret, without which a scanner cannot initiate a connection. Scanning for bridges is closely related to active probing, the topic of Chapter 4.
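The principle of a per-proxy secret that gates the handshake can be sketched as follows. This is a deliberately simplified stand-in, not the ScrambleSuit, obfs4, or Shadowsocks handshake: the nonce and MAC lengths and the plain HMAC construction are assumptions, and a real design must also reject replayed handshakes, which this sketch omits.

```python
import hashlib
import hmac
import os

NONCE_LEN = 32
MAC_LEN = 32

def client_hello(secret: bytes) -> bytes:
    """A client that knows the out-of-band secret proves it in its first message."""
    nonce = os.urandom(NONCE_LEN)
    return nonce + hmac.new(secret, nonce, hashlib.sha256).digest()

def server_should_respond(secret: bytes, first_message: bytes) -> bool:
    """Reply only if the first message carries a valid MAC; otherwise stay silent."""
    if len(first_message) < NONCE_LEN + MAC_LEN:
        return False
    nonce = first_message[:NONCE_LEN]
    tag = first_message[NONCE_LEN:NONCE_LEN + MAC_LEN]
    expected = hmac.new(secret, nonce, hashlib.sha256).digest()
    return hmac.compare_digest(tag, expected)
```

A scanner that connects without the secret, whether it sends nothing, random bytes, or a probe for some known protocol, gets no distinguishing response, so mass scanning reveals only an open port.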

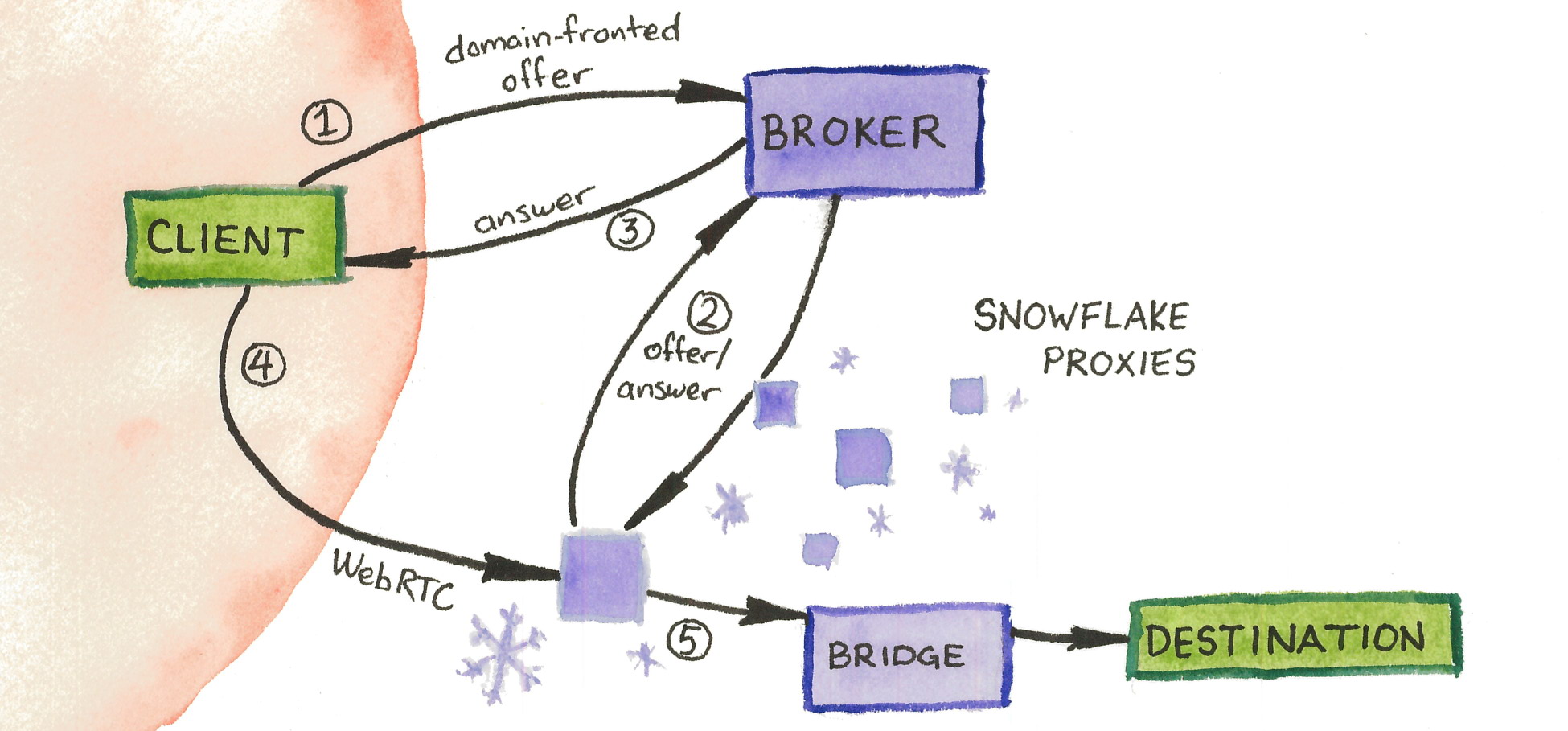

Another way of achieving address blocking resistance is to treat proxies as temporary and disposable, rather than permanent and valuable. This is the idea underlying flash proxy [84] and Snowflake (Chapter 7). Most proxy distribution strategies are designed around proxies lasting at least on the order of days. In contrast, disposable proxies may last only minutes or hours. Setting up a Tor bridge or even something lighter-weight like a SOCKS proxy still requires installing some software on a server somewhere. The proxies of flash proxy and Snowflake have a low set-up and tear-down cost: you can run one just by visiting a web page. These designs do not need a sophisticated proxy distribution strategy as long as the rate of proxy creation is kept higher than the censor’s rate of discovery.
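The condition that creation must outpace discovery can be illustrated with a toy discrete-time model. All parameters and the censor's behavior (blocking up to a fixed number of live proxies per step, oldest first) are assumptions chosen for illustration, not measurements of any real system.

```python
from collections import deque

def simulate(create_rate: int, discover_rate: int, lifetime: int, steps: int) -> list:
    """Count proxies that are alive and unblocked after each time step.

    Toy model: each step, `create_rate` new proxies appear and live for
    `lifetime` steps; the censor blocks up to `discover_rate` live proxies
    per step, oldest first.
    """
    cohorts = deque()  # each entry is [age, unblocked_count], oldest first
    history = []
    for _ in range(steps):
        for cohort in cohorts:
            cohort[0] += 1
        while cohorts and cohorts[0][0] >= lifetime:
            cohorts.popleft()  # these proxies shut down on their own
        cohorts.append([0, create_rate])
        budget = discover_rate
        for cohort in cohorts:
            blocked = min(budget, cohort[1])
            cohort[1] -= blocked
            budget -= blocked
        history.append(sum(c[1] for c in cohorts))
    return history
```

When `discover_rate` is at least `create_rate`, every new proxy is blocked in the step it appears and the usable pool stays empty; when creation is faster, some proxies always remain usable, with no distribution strategy beyond "new ones keep appearing."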

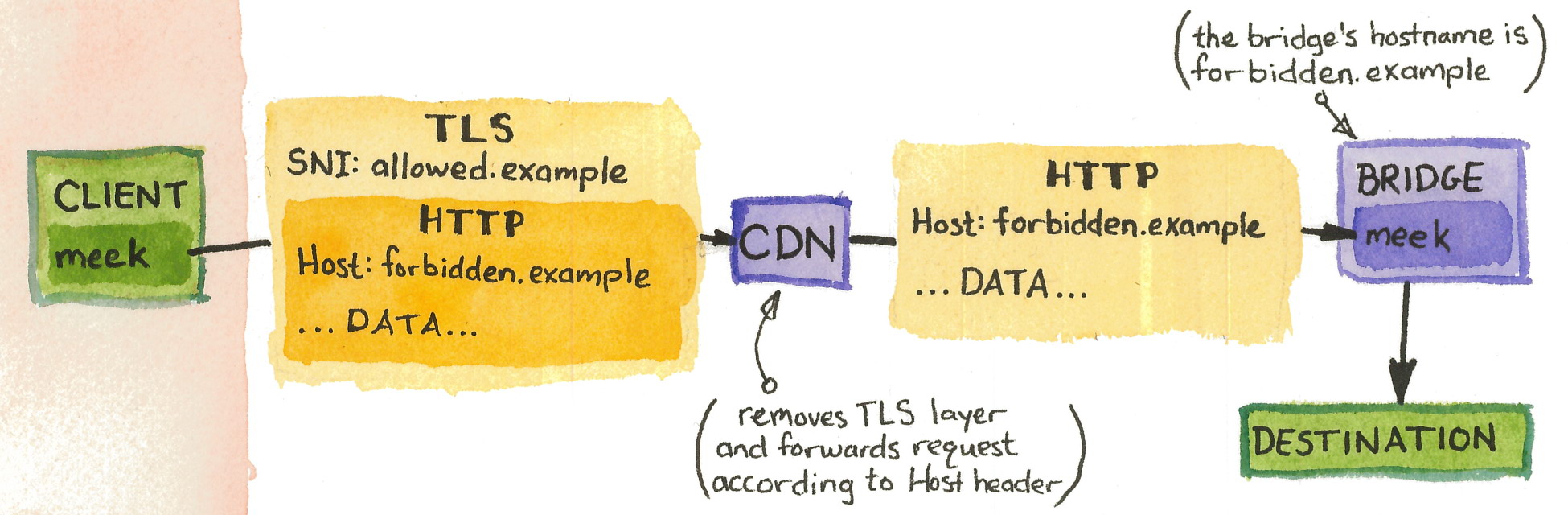

The logic behind diffusing many proxies widely is that a censor would have to block large swaths of the Internet in order to effectively block them. However, it also makes sense to take the opposite tack: have just one or a few proxies, but choose them to have high enough collateral damage that the censor does not dare block them. Refraction networking [160] puts proxy capability into network routers, in the middle of paths rather than at the end. Clients cryptographically tag certain flows in a way that is invisible to the censor but detectable to a refraction-capable router, which redirects the flow from its apparent destination to some other, covert destination. In order to prevent circumvention, the censor has to induce routes that avoid the special routers [168], which is costly [106]. Domain fronting [89] has similar properties. Rather than a router, it uses another kind of network intermediary: a content delivery network. Using properties of HTTPS, a client may request one site while appearing (to the censor) to request another. Domain fronting is the topic of Chapter 6. The big advantage of this general strategy is that the proxies do not need to be kept secret from the censor.
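The "request one site while appearing to request another" property of domain fronting comes from the gap between the name the censor can see (DNS and the TLS SNI) and the HTTP Host header, which travels encrypted. A minimal sketch using Python's standard `ssl` module follows; `front.example` and `covert.example` are placeholder names, and whether a given CDN honors a mismatched Host header is a matter of that provider's policy.

```python
import socket
import ssl

def fronted_request(front: str, covert: str, path: str = "/"):
    """Build a domain-fronted HTTPS request.

    The censor sees `front` in the DNS query and TLS SNI; the CDN routes on
    the Host header, `covert`, which is hidden inside the encrypted stream.
    """
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {covert}\r\n"
        "Connection: close\r\n"
        "\r\n"
    ).encode("ascii")
    return front, request

def send_fronted(front: str, covert: str, path: str = "/") -> bytes:
    """Connect to the front domain and send a request addressed to the covert one."""
    sni, request = fronted_request(front, covert, path)
    context = ssl.create_default_context()
    with socket.create_connection((sni, 443), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=sni) as tls:
            tls.sendall(request)
            chunks = []
            while True:
                data = tls.recv(4096)
                if not data:
                    break
                chunks.append(data)
    return b"".join(chunks)
```

To block the covert site, the censor must block the front domain itself, and with it every other site served by the same name.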

The final strategy for address blocking resistance is address spoofing. The notable design in this category is CensorSpoofer [187]. A CensorSpoofer client never communicates directly with a proxy. It sends upstream data through a low-bandwidth, indirect channel such as email or instant messaging, and downstream data through a simulated VoIP conversation, spoofed to appear as if it were coming from some unrelated dummy IP address. The asymmetric design is feasible because of the nature of web browsing: typical clients send much less than they receive. The client never even needs to know the actual address of the proxy, meaning that CensorSpoofer has high resistance to insider attack: even by running the same software as a legitimate client, the censor does not learn enough information to effect a block. The idea of address spoofing goes back farther; as early as 2001, TriangleBoy [167] employed lighter-weight intermediate proxies that simply forwarded client requests to a long-lived proxy at a static, easily blockable address. In the downstream direction, the long-lived proxy would not route back through the intermediate proxy, but would instead spoof its responses to look as if they came from the intermediate proxy. TriangleBoy did not match CensorSpoofer’s resistance to insider attack, because clients still needed to find and communicate directly with a proxy, so the whole system basically reduced to the proxy discovery problem, despite the use of address spoofing.
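At the packet level, spoofing is nothing more than writing an address other than one's own into the IPv4 source field. The sketch below only builds a 20-byte header (RFC 791 layout, RFC 1071 checksum) with documentation-range placeholder addresses; actually sending it would require a raw socket with administrative privileges, and networks that perform source-address validation will drop such packets.

```python
import socket
import struct

def checksum(data: bytes) -> int:
    """Internet checksum (RFC 1071): one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def ipv4_header(src: str, dst: str, payload_len: int, proto: int = 17, ttl: int = 64) -> bytes:
    """Build an IPv4 header whose source address is whatever the caller says."""
    fields = [
        (4 << 4) | 5,      # version 4, header length of 5 32-bit words
        0,                 # DSCP/ECN
        20 + payload_len,  # total length
        0,                 # identification
        0,                 # flags and fragment offset
        ttl,
        proto,             # 17 = UDP, as a simulated VoIP stream might use
        0,                 # checksum placeholder
        socket.inet_aton(src),
        socket.inet_aton(dst),
    ]
    header = struct.pack("!BBHHHBBH4s4s", *fields)
    return header[:10] + struct.pack("!H", checksum(header)) + header[12:]
```

The receiver, and any censor watching the link, has only this field to go on when attributing the packet to a sender.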

2.4 Spheres of influence and visibility

It is usual to assume, conservatively, that whatever the censor can detect, it also can block; that is, to ignore blocking per se and focus only on the detection problem. We know from experience, however, that there are cases in practice where a censor’s reach exceeds its grasp: where it is able to detect circumvention but for some reason cannot block it. It may be useful to consider this possibility when modeling. Khattak, Elahi, et al. [113] express it nicely by subdividing the censor’s network into a sphere of influence within which the censor has active control, and a potentially larger sphere of visibility within which the censor may only observe, but not act.

A landmark example of this kind of thinking is the 2006 research on “Ignoring the Great Firewall of China” by Clayton et al. [31]. They found that the firewall would block connections by injecting phony TCP RST packets (which cause the connection to be torn down) or SYN/ACK packets (which cause the connection to become unsynchronized), and that simply ignoring the anomalous packets rendered blocking ineffective. (Why did the censor choose to inject its own packets, rather than drop those of the client or server? The answer is probably that injection is technically easier to achieve, highlighting a limit on the censor’s power.) One can think of this ignoring as shrinking the censor’s sphere of influence: it can still technically act within this sphere, but not in a way that actually achieves blocking. Additionally, intensive measurements revealed many failures to block, and blocking rates that changed over time, suggesting that even when the firewall intends a policy of blocking, it does not always succeed.

Another fascinating example of “look, but don’t touch” communication is the “filecasting” technique used by Toosheh [142], a file distribution service based on satellite television broadcasts. Clients tune their satellite receivers to a certain channel and record the broadcast to a USB flash drive. Later, they run a program on the recording that decodes the information and extracts a bundle of files. The system is unidirectional: clients can only receive the files that the operators choose to provide. The censor can easily see that Toosheh is in use—it’s a broadcast, after all—but cannot identify users, or block the signal in any way short of continuous radio jamming or tearing down satellite dishes.

There are parallels between the study of Internet censorship and that of network intrusion detection. One is that a censor’s detector may be implemented as a network intrusion detection system or monitor, a device “on the side” of a communication link that receives a copy of the packets that flow over the link, but that, unlike a router, is not responsible for forwarding the packets onward. Another parallel is that censors are susceptible to the same kinds of evasion and obfuscation attacks that affect network monitors more generally. In 1998, Ptacek and Newsham [158] and Paxson [149 §5.3] outlined various attacks against network intrusion detection systems (such as manipulating the IP time-to-live field or sending overlapping IP fragments) that cause a monitor either to accept what the receiver will reject, or to reject what the receiver will accept. A basic problem is that a monitor’s position in the middle of the network does not enable it to predict exactly how each packet will be interpreted by the endpoints. Cronin et al. [36] posit that the monitor’s conflicting goals of sensitivity (recording all that is relevant) and selectivity (recording only what is relevant) give rise to an unavoidable “eavesdropper’s dilemma.”
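The overlapping-fragment ambiguity can be shown with a toy reassembler whose overlap policy is a parameter. Byte offsets are used for simplicity (real IPv4 fragment offsets count 8-byte units), and the two policies stand for the fact that different stacks have resolved overlaps differently; which stack uses which is not asserted here.

```python
def reassemble(fragments, policy: str) -> bytes:
    """Reassemble (offset, data) fragments, resolving overlaps by `policy`.

    policy="first": bytes already received are kept.
    policy="last":  later fragments overwrite earlier ones.
    """
    buf = {}
    for offset, data in fragments:
        for i, byte in enumerate(data):
            pos = offset + i
            if policy == "last" or pos not in buf:
                buf[pos] = byte
    return bytes(buf[i] for i in sorted(buf))
```

If the monitor resolves overlaps one way and the receiver the other, they reconstruct different messages from the same packets, and the monitor's verdict applies to a message the receiver never sees.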

Monitor evasion techniques can be used to reduce a censor’s sphere of visibility by removing certain traffic features from its consideration. Crandall et al. [33] in 2007 suggested using IP fragmentation to prevent keyword matching. In 2008 and 2009, Park and Crandall [148] explicitly characterized the Great Firewall as a network intrusion detection system and found that a lack of TCP reassembly allowed evading keyword matching. Winter and Lindskog [199] found that the Great Firewall still did not do TCP segment reassembly in 2012. They released a tool, brdgrd [196], that, by manipulating the TCP window size, prevented the censor’s scanners from receiving a full response in the first packet, thereby foiling active probing. Anderson [9] gave technical information on the implementation of the Great Firewall as it existed in 2012, and observed that it is implemented as an “on-the-side” monitor. Khattak et al. [114] applied a wide array of evasion experiments to the Great Firewall in 2013, identifying classes of working evasions and estimating the cost to counteract them. Wang et al. [189] did further evasion experiments against the Great Firewall a few years later, finding that the firewall had evolved to prevent some previous evasion techniques, and discovering new ones.
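Why a lack of TCP reassembly matters can be shown by comparing a matcher that inspects each segment in isolation with one that inspects the reassembled stream. The keyword and function names are hypothetical; the splitting function cuts each occurrence of the keyword in half so that no single segment contains it whole.

```python
def per_packet_match(segments, keyword: bytes) -> bool:
    """A monitor without TCP reassembly inspects each segment in isolation."""
    return any(keyword in seg for seg in segments)

def reassembled_match(segments, keyword: bytes) -> bool:
    """A monitor with reassembly inspects the in-order concatenated stream."""
    return keyword in b"".join(segments)

def split_on_keyword(payload: bytes, keyword: bytes) -> list:
    """Cut the payload inside every occurrence of the keyword."""
    if len(keyword) < 2:
        return [payload]  # a one-byte keyword cannot be split
    segments, start = [], 0
    i = payload.find(keyword)
    while i >= 0:
        cut = i + len(keyword) // 2
        segments.append(payload[start:cut])
        start = cut
        i = payload.find(keyword, cut)
    segments.append(payload[start:])
    return segments
```

The endpoint's TCP stack reassembles the segments into the original request, so the split costs the client nothing, while a per-segment matcher never sees the keyword.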

2.5 Early censorship and circumvention

Internet censorship and circumvention began to rise to importance in the mid-1990s, coinciding with the popularization of the World Wide Web. Even before national-level censorship by governments became an issue, researchers investigated the blocking policies of personal firewall products (those intended, for example, for parents to install on the family computer). Meeks and McCullagh [138] reported in 1996 on the secret blocking lists of several programs. Bennett Haselton and Peacefire [100] found many cases of programs blocking more than they claimed, including web sites related to politics and health.

Governments were not far behind in building legal and technical structures to control the flow of information on the web, in some cases adapting the same technology originally developed for personal firewalls. The term “Great Firewall of China” first appeared in an article in Wired [15] in 1997. In the wake of the first signs of blocking by ISPs, people were thinking about how to bypass filters. The circumvention systems of that era were largely HTML-rewriting web proxies: essentially a form on a web page into which a client would enter a URL. The server would fetch the desired page on behalf of the client, and before returning the response, rewrite all the links and external references in the page to make them relative to the proxy. CGIProxy [131], SafeWeb [132], Circumventor [99], and the first version of Psiphon [28] were all of this kind.
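The core transformation performed by an HTML-rewriting proxy can be sketched in a few lines: resolve every link against the fetched page's URL, then point it back through the proxy. The proxy endpoint is a placeholder, and the regular expression handles only quoted `href`, `src`, and `action` attributes; real proxies also had to deal with unquoted attributes, CSS, and scripts.

```python
import re
from urllib.parse import quote, urljoin

PROXY_PREFIX = "https://proxy.example/fetch?url="  # hypothetical proxy endpoint

ATTR_RE = re.compile(r'''\b(href|src|action)\s*=\s*(["'])(.*?)\2''', re.IGNORECASE)

def rewrite(html: str, page_url: str) -> str:
    """Point every link and external reference back through the proxy."""
    def repl(m):
        attr, quote_char, target = m.group(1), m.group(2), m.group(3)
        absolute = urljoin(page_url, target)  # make relative links absolute first
        return f"{attr}={quote_char}{PROXY_PREFIX}{quote(absolute, safe='')}{quote_char}"
    return ATTR_RE.sub(repl, html)
```

Because every subsequent request goes to the proxy's own address, a censor filtering by destination sees only the proxy, which is exactly why blocking shifted to the proxies themselves.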

These systems were effective against the censors of their day, at least with respect to the blocking of destinations. They had the major advantage of requiring no special client-side software other than a web browser. The difficulty they faced was second-order blocking as censors discovered and blocked the proxies themselves. Circumvention designers deployed some countermeasures; for example, Circumventor had a mailing list [49 §7.4] that would send out fresh proxy addresses every few days. A 1996 article by Rich Morin [140] presented a prototype HTML-rewriting proxy called Rover, which eventually became CGIProxy. The article predicted the failure of censorship based on URL or IP address, as long as a significant fraction of web servers ran such proxies. That vision has not come to pass. Accumulating a sufficient number of proxies and communicating their addresses securely to clients (in short, the proxy distribution problem) turned out not to follow automatically, but to be a major sub-problem of its own.

Threat models had to evolve along with censor capabilities. The first censors would be considered weak by today’s standards, mostly easy to circumvent by simple countermeasures, such as tweaking a protocol or using an alternative DNS server. (We see the same progression play out again when countries first begin to experiment with censorship, such as in Turkey in 2014, where alternative DNS servers briefly sufficed to circumvent a block of Twitter [35].) Not only were censors changing; the world around them was changing as well. In the field of circumvention, which is so heavily affected by concerns about collateral damage, the milieu in which censors operate is as important as the censors themselves. A good example of this is the paper on Infranet, the first academic circumvention design I am aware of. Its authors argued, not unreasonably for 2001, that TLS would not suffice as a cover protocol [62 §3.2], because the relatively few TLS-using services at that time could all be blocked without much harm. Certainly the circumstances are different today: domain fronting and all refraction networking schemes require the censor to permit TLS. As long as circumvention remains relevant, it will have to change along with changing times, just as censors do.

Chapter 3

Understanding censors

The main tool we have to build relevant threat models is the study of censors. The study of censors is complicated by the difficulty of access: censors are not forthcoming about their methods. Researchers are obligated to treat censors as a black box, drawing inferences about their internal workings from their externally visible characteristics. The easiest thing to learn is the censor’s what: the destinations and contents that are blocked. Somewhat harder is the investigation into where and how, the specific technical mechanisms used to effect censorship and where they are deployed in the network. Most difficult to infer is the why, the motivations and goals that underlie an apparatus of censorship.

From past measurement studies we may draw a few general conclusions. Censors change over time, and not always in the direction of more restrictions. Censorship differs greatly across countries, not only in subject but in mechanism and motivation. However, it is reasonable to assume a basic set of capabilities that many censors have in common:

- blocking of specific IP addresses and ports

- control of default DNS servers

- blocking DNS queries

- injection of false DNS responses

- injection of TCP RSTs

- keyword filtering in unencrypted contents

- application protocol parsing (“deep packet inspection”)

- participation in a circumvention system as a client

- scanning to discover proxies

- throttling connections

- temporary total shutdowns

Not all censors will be able—or motivated—to do all of these. As the amount of traffic to be handled increases, in-path attacks such as throttling become relatively more expensive. Whether a particular act of censorship even makes sense will depend on a local cost–benefit analysis, a weighing of the expected gains against the potential collateral damage. Some censors may be able to tolerate a brief total shutdown, while for others the importance of Internet connectivity is too great for such a blunt instrument.

The Great Firewall of China (GFW), because of its unparalleled technical sophistication, is tempting to use as a model adversary. There has indeed been more research focused on China than any other country. But the GFW is in many ways an outlier, and not representative of other censors. A worldwide view is needed.

Building accurate models of censor behavior is not only needed for the purpose of circumvention. It also has implications for ethical measurement [108 §2, 202 §5]. For example, a common way to test for censorship is to ask volunteers to run software that connects to potentially censored destinations and records the results. This potentially puts volunteers at risk. Suppose the software accesses a destination that violates local law. Could the volunteer be held liable for the access? Quantifying the degree of risk depends on modeling how a censor will react to a given stimulus [32 §2.2].

3.1 Censorship measurement studies

A large part of research on censorship is composed of studies of censor behavior in the wild. In this section I summarize past studies, which, taken together, present a picture of censor behavior in general. They are based on those in an evaluation study done by me and others in 2016 [182 §IV.A]. The studies are diverse and there are many possible ways to categorize them. Here, I have divided them into one-time experiments and generic measurement platforms.

One-shot studies

One of the earliest technical studies of censorship occurred in a place you might not expect, the German state of North Rhine-Westphalia. Dornseif [52] tested ISPs’ implementation of a controversial legal order to block web sites circa 2002. While there were many possible ways to implement the block, none were trivial to implement, nor free of overblocking side effects. The most popular implementation used DNS tampering, that is, returning (or injecting) false responses to DNS requests for the blocked sites. An in-depth survey of DNS tampering found a variety of implementations, some blocking more and some blocking less than required by the order. This time period seems to mark the beginning of censorship by DNS tampering in general; Dong [51] reported it in China in late 2002.

Zittrain and Edelman [208] used open proxies to experimentally analyze censorship in China in late 2002. They tested around 200,000 web sites and found around 19,000 of them to be blocked. There were multiple methods of censorship: web server IP address blocking, DNS server IP address blocking, DNS poisoning, and keyword filtering.

Clayton [30] in 2006 studied a “hybrid” blocking system, CleanFeed by the British ISP BT, that aimed for a better balance of costs and benefits: a “fast path” IP address and port matcher acted as a prefilter for the “slow path,” a full HTTP proxy. The system, in use since 2004, was designed to block access to any of a secret list of web sites. The system was vulnerable to a number of evasions, such as using a proxy, using an alternate IP address or port, and obfuscating URLs. The two-level nature of the blocking system unintentionally made it an oracle that could reveal the IP addresses of sites in the secret blocking list.
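The two-stage structure can be sketched as a cheap (IP, port) prefilter in front of an exact URL check. This is an illustration of the architecture as described, not CleanFeed's implementation; the class, the exact-string URL comparison, and the `resolve` callback are assumptions. The `diverted` method stands for the fact that diversion to the slow path is itself observable in some way, which is what made the system an oracle.

```python
from urllib.parse import urlsplit

class HybridFilter:
    """Two-stage blocking in the style described for CleanFeed (illustrative)."""

    def __init__(self, blocked_urls, resolve):
        self.blocked_urls = set(blocked_urls)
        self.suspect = set()  # (IP, port) pairs that host some blocked URL
        for url in self.blocked_urls:
            parts = urlsplit(url)
            self.suspect.add((resolve(parts.hostname), parts.port or 80))

    def allows(self, dst_ip: str, dst_port: int, url: str) -> bool:
        if (dst_ip, dst_port) not in self.suspect:
            return True                      # fast path: forwarded untouched
        return url not in self.blocked_urls  # slow path: full URL check

    def diverted(self, dst_ip: str, dst_port: int) -> bool:
        """Whether traffic goes to the slow path; if observable, it leaks the list."""
        return (dst_ip, dst_port) in self.suspect
```

The evasions follow directly from the structure: a different port or address skips the slow path entirely, and a URL that is spelled differently but means the same thing fails the exact comparison.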

In 2006, Clayton, Murdoch, and Watson [31] further studied the technical aspects of the Great Firewall of China. They relied on an observation that the firewall was symmetric, treating incoming and outgoing traffic equally. By sending web requests from outside the firewall to a web server inside, they could provoke the same blocking behavior that someone on the inside would see. They sent HTTP requests containing forbidden keywords that caused the firewall to inject RST packets towards both the client and server. Simply ignoring RST packets (on both ends) rendered the blocking mostly ineffective. The injected packets had inconsistent TTLs and other anomalies that enabled their identification. Rudimentary countermeasures, such as splitting keywords across packets, were also effective in avoiding blocking. The authors brought up an important point that would become a major theme of future censorship modeling: censors are forced to trade blocking effectiveness against performance. In order to cope with high load at a reasonable cost, censors may employ the “on-path” architecture of a network monitor or intrusion detection system, that is, one that can passively monitor and inject packets, but cannot delay or drop them.
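Ignoring every RST also discards legitimate ones. A more selective, hypothetical variant uses the TTL anomaly: remember the TTL of the peer's genuine packets on each flow and ignore RSTs that arrive with a noticeably different TTL. The tolerance value and class name are assumptions, and a censor that forges plausible TTLs would defeat this heuristic.

```python
class RstFilter:
    """Ignore RSTs whose IP TTL differs from what the real peer has been sending.

    A forged packet from a middlebox at a different hop distance, or with a
    different initial TTL, tends to arrive with a different TTL than genuine
    packets from the peer.
    """

    def __init__(self, tolerance: int = 2):
        self.tolerance = tolerance
        self.peer_ttl = {}  # flow -> TTL observed on the peer's first non-RST packet

    def observe(self, flow, ttl: int, is_rst: bool) -> bool:
        """Return True if the packet should be accepted, False to ignore it."""
        if not is_rst:
            self.peer_ttl.setdefault(flow, ttl)
            return True
        expected = self.peer_ttl.get(flow)
        if expected is None:
            return True  # nothing to compare against; accept
        return abs(ttl - expected) <= self.tolerance
```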

Contemporaneous studies of the Great Firewall by Wolfgarten [201] and Tokachu [175] found cases of DNS tampering, search engine filtering, and RST injection caused by keyword detection. In 2007, Lowe, Winters, and Marcus [125] did detailed experiments on DNS tampering in China. They tested about 1,600 recursive DNS servers in China against a list of about 950 likely-censored domains. For about 400 domains, responses came back with bogus IP addresses, chosen from a set of about 20 distinct IP addresses. Eight of the bogus addresses were used more than the others: a whois lookup placed them in Australia, Canada, China, Hong Kong, and the U.S. By manipulating the IP time-to-live field, the authors found that the false responses were injected by an intermediate router, evidenced by the fact that the authentic response would be received as well, only later. A more comprehensive survey [12] of DNS tampering occurred in 2014, giving remarkable insight into the internal structure of the censorship machines. DNS injection happened only at border routers. IP ID and TTL analysis showed that each node was a cluster of several hundred processes that collectively injected censored responses. They found 174 bogus IP addresses, more than previously documented, and extracted a blacklist of about 15,000 keywords.
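One way to use the TTL field to locate an injector is to send a DNS query with a TTL too small to reach the resolver: any answer must then come from something on the path. This is a generic sketch of the idea, not necessarily the exact procedure of [125]. The query follows the RFC 1035 message format; the function names are hypothetical, and the probe is not run here because it needs network access.

```python
import random
import socket
import struct

def dns_query(name: str, qtype: int = 1) -> bytes:
    """Build a minimal DNS query: 12-byte header plus one question, RD set."""
    header = struct.pack("!HHHHHH", random.randrange(65536), 0x0100, 1, 0, 0, 0)
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.rstrip(".").split(".")) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)  # QTYPE, QCLASS=IN

def ttl_limited_probe(server: str, name: str, ttl: int, timeout: float = 3.0):
    """Send a query whose IP TTL expires before it can reach `server`.

    A genuine server never sees such a query, so any reply was injected by
    a device within `ttl` hops. Returns the raw reply, or None on timeout.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_TTL, ttl)
        sock.settimeout(timeout)
        sock.sendto(dns_query(name), (server, 53))
        try:
            reply, _ = sock.recvfrom(4096)
            return reply
        except socket.timeout:
            return None
```

Increasing `ttl` one hop at a time until replies start to arrive brackets the injector's position on the path.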

The Great Firewall, because of its unusual sophistication, has been an enduring object of study. Part of what makes it interesting is its many blocking modalities, both active and passive, proactive and reactive. The ConceptDoppler project of Crandall et al. [33] measured keyword filtering by the Great Firewall and showed how to discover new keywords automatically by latent semantic analysis, using the Chinese-language Wikipedia as a corpus. They found limited statefulness in the firewall: sending a naked HTTP request without a preceding SYN resulted in no blocking. In 2008 and 2009, Park and Crandall [148] further tested keyword filtering of HTTP responses. Injecting RST packets into responses is more difficult than doing the same to requests, because of the greater uncertainty in predicting TCP sequence numbers once a session is well underway. In fact, RST injection into responses was hit or miss, succeeding only 51% of the time, with some, apparently diurnal, variation. They also found inconsistencies in the statefulness of the firewall. Two of ten injection servers would react to a naked HTTP request, that is, one sent outside of an established TCP connection. The remaining eight of ten required an established TCP connection. Xu et al. [204] continued the theme of keyword filtering in 2011, with the goal of discovering where filters are located at the IP and autonomous system levels. Most filtering was done at border networks (autonomous systems with at least one peer outside China). In their measurements, the firewall was fully stateful: blocking was never triggered by an HTTP request outside an established TCP connection. Much filtering occurred at smaller regional providers, rather than on the network backbone. Anderson [9] gave a detailed description of the design of the Great Firewall in 2012. He described IP address blocking by null routing, RST injection, and DNS poisoning, and documented cases of collateral damage affecting clients inside and outside China.
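The difference between a stateless and a stateful keyword filter, which is what a "naked" HTTP request tests, can be sketched as a small state machine. This is a simplified model: the flow identifier is assumed to be shared by both directions of a connection, TCP flags are written as strings, and sequence numbers are ignored.

```python
class KeywordFilter:
    """Keyword filter that may or may not require a completed TCP handshake.

    stateful=True:  inspect payloads only on flows whose handshake was seen.
    stateful=False: inspect any packet, including a naked request.
    """

    def __init__(self, keywords, stateful: bool):
        self.keywords = [k.encode() for k in keywords]
        self.stateful = stateful
        self.state = {}  # flow -> handshake progress

    def packet(self, flow, flags: str, payload: bytes = b"") -> bool:
        """Return True if the filter would inject RSTs in response to this packet."""
        step = self.state.get(flow)
        if flags == "S":
            self.state[flow] = "SYN"
        elif flags == "SA" and step == "SYN":
            self.state[flow] = "SYNACK"
        elif flags == "A" and step == "SYNACK":
            self.state[flow] = "ESTABLISHED"
        if self.stateful and self.state.get(flow) != "ESTABLISHED":
            return False
        return any(k in payload for k in self.keywords)
```

Sending the same keyword-bearing request with and without a preceding handshake, and observing whether RSTs come back, distinguishes the two behaviors from outside.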

Dainotti et al. [37] reported on the total Internet shutdowns that took place in Egypt and Libya in the early months of 2011. They used multiple measurements to document the outages as they occurred. During the shutdowns, they measured a drop in scanning traffic (mainly from the Conficker botnet). By comparing these different measurements, they showed that the shutdown in Libya was accomplished in more than one way, both by altering network routes and by firewalls dropping packets.

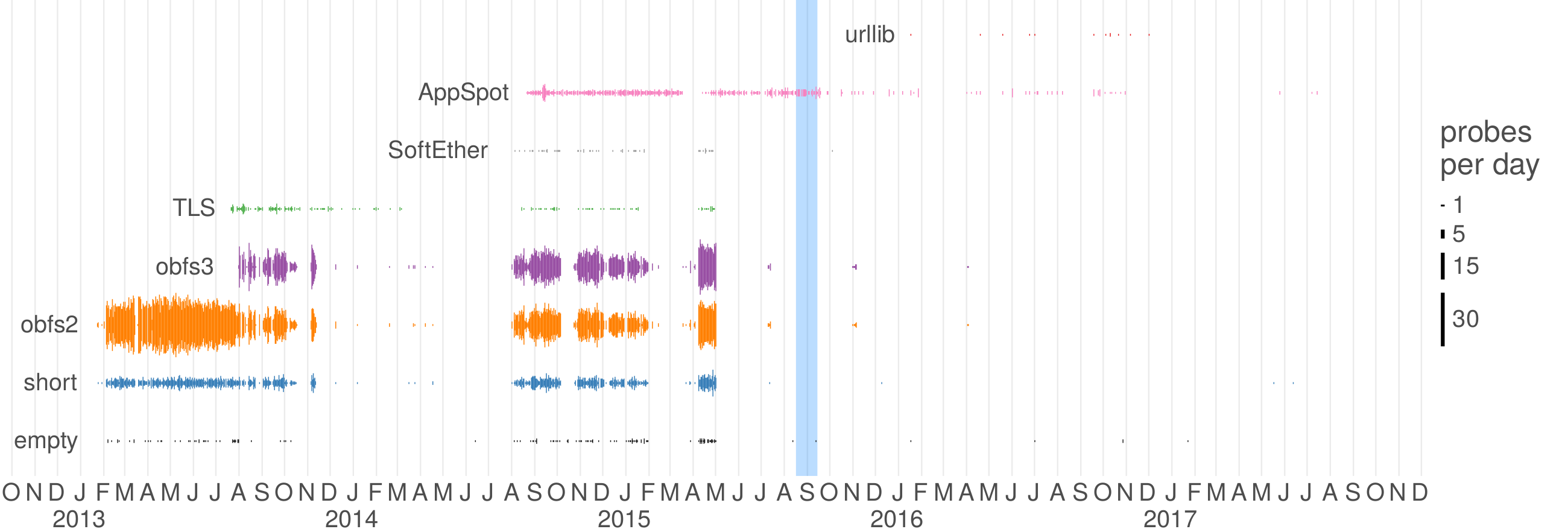

Winter and Lindskog [199], and later Ensafi et al. [60], did a formal investigation into active probing, a reported capability of the Great Firewall since around October 2011. They focused on the firewall’s probing of Tor and its most common pluggable transports.

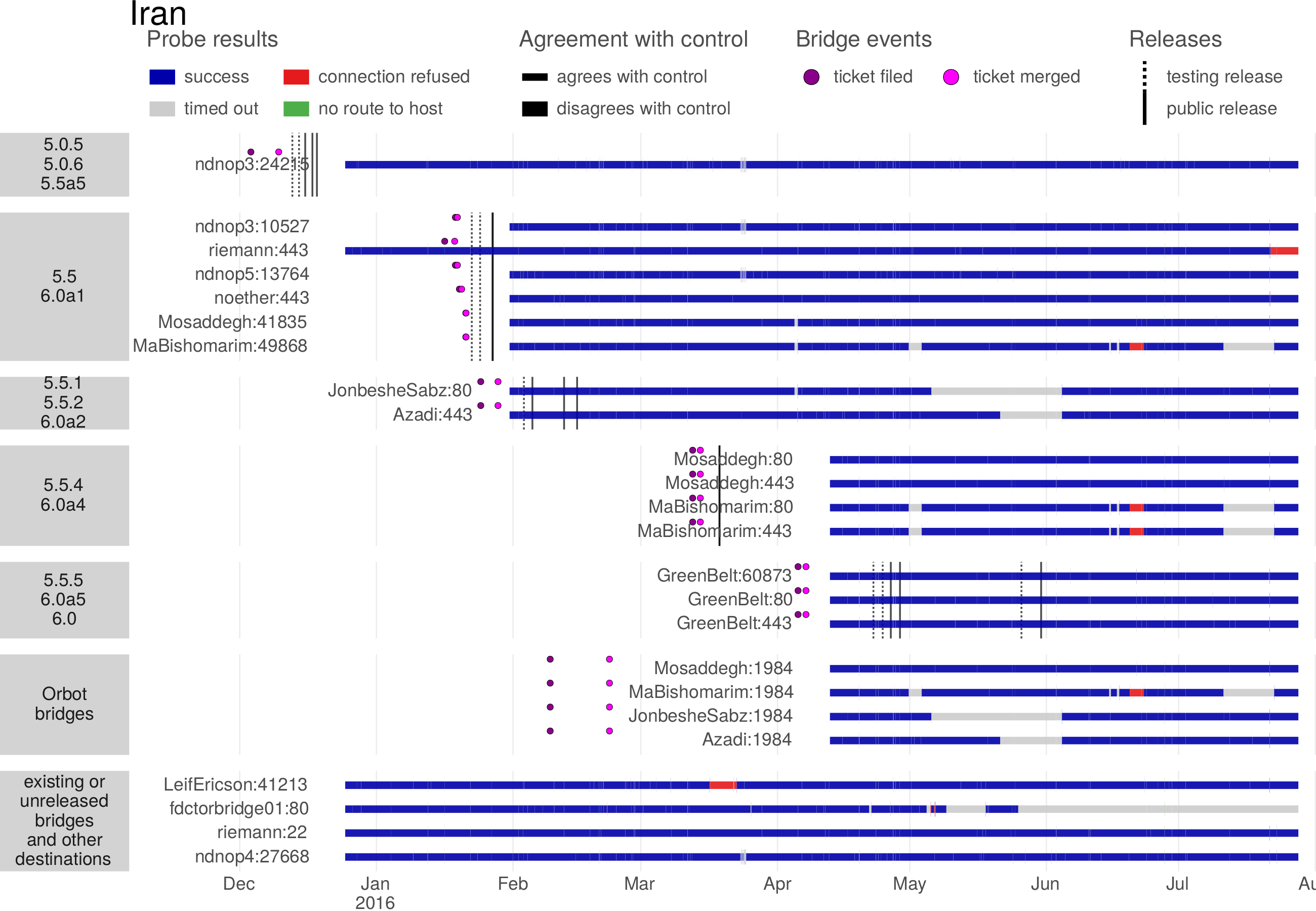

Anderson [6] documented network throttling in Iran, which occurred over two major periods between 2011 and 2012. Throttling degrades network access without totally blocking it, and is harder to detect than blocking. Academic institutions were affected by throttling, but less so than other networks. Aryan et al. [14] tested censorship in Iran during the two months before the June 2013 presidential election. They found multiple blocking methods: HTTP request keyword filtering, DNS tampering, and throttling. The most common method was HTTP request filtering; DNS tampering (directing to a blackhole IP address) affected only the three domains facebook.com, youtube.com, and plus.google.com. SSH connections were throttled down to about 15% of the link capacity, while randomized protocols were throttled almost down to zero, 60 seconds into a connection’s lifetime. Throttling seemed to be achieved by dropping packets, which causes TCP to slow down.
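Why dropping packets throttles TCP can be quantified with the well-known approximation of Mathis et al. (1997) for steady-state Reno-style throughput: roughly (MSS/RTT)·C/√p, with C = √(3/2) and p the loss rate. This formula is background for the mechanism, not something measured in the cited studies, and the parameter values below are arbitrary.

```python
import math

def mathis_throughput(mss_bytes: float, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state TCP throughput in bytes per second.

    throughput ~= (MSS / RTT) * C / sqrt(p), with C = sqrt(3/2).
    """
    c = math.sqrt(3 / 2)
    return (mss_bytes / rtt_s) * c / math.sqrt(loss_rate)
```

With a 1460-byte MSS and a 200 ms RTT, 1% loss gives about 89,000 bytes per second; quadrupling the loss rate to 4% halves that. A censor can thus dial throughput down smoothly by adjusting a drop probability, without ever cleanly blocking the connection.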

Khattak et al. [114] evaluated the Great Firewall from the perspective that it works like an intrusion detection system or network monitor, and applied existing techniques for evading a monitor to the problem of circumvention. They looked particularly for ways to evade detection that are expensive for the censor to remedy. They found that the firewall was stateful, but only in the client-to-server direction. The firewall was vulnerable to a variety of TCP- and HTTP-based evasion techniques, such as overlapping fragments, TTL-limited packets, and URL encodings.

Nabi [141] investigated web censorship in Pakistan in 2013, using a publicly available list of banned web sites. Over half of the sites on the list were blocked by DNS tampering; less than 2% were additionally blocked by HTTP filtering (an injected redirect before April 2013, or a static block page after that). They conducted a small survey to find the most commonly used circumvention methods; the most common was public VPNs, at 45% of respondents. Khattak et al. [115] looked at two censorship events that took place in Pakistan in 2011 and 2012. Their analysis is special because unlike most studies of censorship, theirs uses traffic traces taken directly from an ISP. They observe that users quickly switched to TLS-based circumvention following a block of YouTube. The blocks had side effects beyond a loss of connectivity: the ISP had to deal with more ciphertext than before, and users turned to alternatives for the blocked sites. Their survey found that the most common method of circumvention was VPNs. Aceto and Pescapè [2] revisited Pakistan in 2016. Their analysis of six months of active measurements in five ISPs showed that blocking techniques differed across ISPs; some used DNS poisoning and others used HTTP filtering. They did their own survey of commonly used circumvention technologies, and again the winner was VPNs with 51% of respondents.

Ensafi et al. [61] employed an intriguing technique to measure censorship from many locations in China—a “hybrid idle scan.” The hybrid idle scan allows one to test TCP connectivity between two Internet hosts, without needing to control either one. They selected roughly uniformly geographically distributed sites in China from which to measure connectivity to Tor relays, Tor directory authorities, and the web servers of popular Chinese web sites. There were frequent failures of the firewall resulting in temporary connectivity, typically occurring in bursts of hours.
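The side channel at the heart of idle scanning is a host's globally incrementing 16-bit IP ID counter. The sketch below shows only the classic IP ID inference; the hybrid variant of [61] combines this with other side channels and is not reproduced here. The scanner reads the counter on a third-party host (the "zombie"), sends the target a SYN spoofed from the zombie's address, then reads the counter again.

```python
def ipid_delta(before: int, after: int) -> int:
    """Increase of a 16-bit IP ID counter, allowing for wraparound."""
    return (after - before) % 65536

def infer_port_state(before: int, after: int) -> str:
    """Classic idle-scan inference from the zombie's IP ID readings.

    If the target answered the spoofed SYN with a SYN/ACK, the zombie replied
    with an RST, consuming one extra IP ID. The zombie's reply to the second
    probe itself always consumes one.
    """
    delta = ipid_delta(before, after)
    if delta == 1:
        return "closed or filtered"  # only the reply to our own probe
    if delta == 2:
        return "open"                # zombie also sent an RST to the target
    return "inconclusive"            # other traffic at the zombie interfered
```

Since neither the zombie nor the target is under the measurer's control, this kind of inference can test reachability between arbitrary hosts, subject to noise from the zombie's other traffic.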

In 2015, Marczak et al. [129] investigated an innovation in the capabilities of the border routers of China, an attack tool dubbed the Great Cannon. The cannon was responsible for denial-of-service attacks on Amazon CloudFront and GitHub. The unwitting participants in the attack were web browsers located outside of China, which began their attack when the cannon injected malicious JavaScript into certain HTTP responses originating inside of China. The new attack tool was noteworthy because it demonstrated previously unseen in-path behavior, such as packet dropping.

A major aspect of censor modeling is that many censors use commercial firewall hardware. Dalek et al. [39], Dalek et al. [38], and Marquis-Boire et al. [130] documented the use of commercial firewalls made by Blue Coat, McAfee, and Netsweeper in a number of countries. Chaabane et al. [27] analyzed 600 GB of leaked logs from Blue Coat proxies that were being used for censorship in Syria. The logs cover 9 days in July and August 2011, and contain an entry for every HTTP request. The authors of the study found evidence of IP address blocking, DNS blocking, and HTTP request keyword blocking; and also evidence of users circumventing censorship by downloading circumvention software or using the cache feature of Google search. All subdomains of .il, the top-level domain for Israel, were blocked, as were many IP address ranges in Israel. Blocked URL keywords included “proxy”, which resulted in collateral damage to the Google Toolbar and the Facebook like button because they included the string “proxy” in HTTP requests. Tor was only lightly censored: only one of several proxies blocked it, and only sporadically.
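The collateral damage from URL keyword blocking follows from how crude substring matching is. The sketch below is a hypothetical filter, not Blue Coat’s actual rule syntax, that combines two of the policies described above: block every host under the .il top-level domain, and block any URL containing the keyword “proxy”. The example URLs are made up.

```python
from urllib.parse import urlsplit

BLOCKED_TLDS = {"il"}            # block every domain under these TLDs
BLOCKED_KEYWORDS = ("proxy",)    # block any URL containing these substrings


def is_blocked(url: str) -> bool:
    """Return True if this hypothetical filter would block a request for url."""
    host = (urlsplit(url).hostname or "").lower()
    if host.rsplit(".", 1)[-1] in BLOCKED_TLDS:
        return True
    # Plain substring matching cannot tell a circumvention proxy from an
    # unrelated web service that merely has "proxy" somewhere in its URL.
    lowered = url.lower()
    return any(keyword in lowered for keyword in BLOCKED_KEYWORDS)


if __name__ == "__main__":
    for url in [
        "http://news.example.co.il/",                   # blocked by TLD
        "http://free-proxy.example.com/",               # intended target
        "http://toolbar.example.com/api/proxy/query",   # collateral damage
        "http://www.example.com/index.html",            # allowed
    ]:
        print(is_blocked(url), url)
```

The third URL is blocked for the same reason as the second; nothing in the rule distinguishes them.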

Generic measurement platforms

For a decade, the OpenNet Initiative produced reports on Internet filtering and surveillance in dozens of countries, until it ceased operation in 2014. For example, their 2005 report on Internet filtering in China [146] studied the problem from many perspectives: political, technical, and legal. They tested the extent of filtering of web sites, search engines, blogs, and email. They found a number of blocked web sites, some related to news and politics, and some on sensitive subjects such as Tibet and Taiwan. In some cases, entire domains were blocked; in others, only specific URLs within the domain were blocked. There were cases of overblocking: apparently inadvertently blocked sites that happened to share an IP address or URL keyword with an intentionally blocked site. The firewall terminated connections by injecting a TCP RST packet, then injecting a zero-sized TCP window, which would prevent any communication with the same server for a short time. Using technical tricks, the authors inferred that Chinese search engines indexed blocked sites (perhaps having a special exemption from the general firewall policy), but did not return them in search results [147]. Censorship of blogs included keyword blocking by domestic blogging services, and blocking of external domains such as blogspot.com [145]. Email filtering was done by the email providers themselves, not by an independent network firewall. Email providers seemed to implement their filtering rules independently and inconsistently: messages were blocked by some providers and not others.
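Overblocking through a shared IP address takes only a few lines to demonstrate. The sketch assumes a firewall that blocks by destination IP address, and a hypothetical mapping of made-up domain names to documentation-range addresses. Blocking the address of one intended target also blocks every other site hosted at that address.

```python
def collateral_sites(dns: dict[str, str], targets: set[str]) -> set[str]:
    """Sites blocked only because they share an IP address with an intended target."""
    blocked_ips = {dns[name] for name in targets}
    return {name for name, ip in dns.items()
            if ip in blocked_ips and name not in targets}


dns = {
    "banned-news.example": "192.0.2.10",
    "recipes.example": "192.0.2.10",      # same shared-hosting server
    "weather.example": "198.51.100.7",
}
print(collateral_sites(dns, {"banned-news.example"}))  # {'recipes.example'}
```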

Sfakianakis et al. [169] built CensMon, a system for testing web censorship using PlanetLab, a distributed network research platform. They ran the system for 14 days in 2011 across 33 countries, testing about 5,000 unique URLs. They found 193 blocked domain–country pairs, 176 of them in China. CensMon was not run on a continuing basis. Verkamp and Gupta [185] did a separate study in 11 countries, using a combination of PlanetLab nodes and the computers of volunteers. Censorship techniques varied across countries; for example, some showed overt block pages and others did not.

OONI [92] and ICLab [107] are dedicated censorship measurement platforms. Razaghpanah et al. [159] provide a comparison of the two platforms. Both work by running regular network measurements from the computers of volunteers or through VPNs. UBICA [3] is another system based on volunteers running probes; its authors used it to investigate several forms of censorship in Italy, Pakistan, and South Korea.

Anderson et al. [8] used RIPE Atlas, a globally distributed Internet measurement network, to examine two case studies of censorship: Turkey’s ban on social media sites in March 2014 and Russia’s blocking of certain LiveJournal blogs in March 2014. Atlas allows four types of measurements: ping, traceroute, DNS resolution, and TLS certificate fetching. In Turkey, they found at least six shifts in policy during two weeks of site blocking. They observed an escalation in blocking in Turkey: the authorities first poisoned DNS for twitter.com, then blocked the IP addresses of the Google public DNS servers, then finally blocked Twitter’s IP addresses directly. In Russia, they found ten unique bogus IP addresses used to poison DNS.

Pearce, Ensafi, et al. [150] made Augur, a scaled-up version of the hybrid idle scan of Ensafi et al. [61], designed for continuous, global measurement of disruptions of TCP connectivity. The basic tool is the ability to detect packet drops between two remote hosts; but expanding it to a global scale poses a number of technical challenges. Pearce et al. [151] built Iris, a system to measure DNS manipulation globally. Iris uses open resolvers and evaluates measurements against the detection metrics of consistency (answers from different locations should be the same or similar) and independent verifiability (checking results against other sources of data like TLS certificates) in order to decide when they constitute manipulation.
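The two metrics can be sketched as a decision procedure for a single DNS answer. This is a simplification, not Iris’s implementation, which compares several properties of each answer. Here “consistency” is reduced to overlap with addresses returned by control resolvers, and “independent verifiability” to a boolean saying whether a TLS certificate valid for the queried name was served at the answered address; the caller is assumed to have performed that check.

```python
def classify_dns_answer(answer_ips: set[str],
                        control_ips: set[str],
                        tls_cert_valid: bool) -> str:
    """Label one resolver's answer for one domain name.

    answer_ips:     addresses returned by the resolver under test
    control_ips:    addresses returned for the same name by trusted control resolvers
    tls_cert_valid: whether a certificate valid for the name was served at
                    the answered address (hypothetical precomputed check)
    """
    if answer_ips & control_ips:
        return "consistent"    # agrees with what other vantage points see
    if tls_cert_valid:
        return "verified"      # differs (e.g., a CDN edge) but checks out
    return "manipulated"       # neither consistent nor independently verifiable
```

The second branch is what keeps a content delivery network, which legitimately returns different addresses in different places, from being mistaken for manipulation.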

3.2 The evaluation of circumvention systems

Evaluating the quality of circumvention systems is tricky, whether they are only proposed or actually deployed. The problem of evaluation is directly tied to threat modeling. Circumvention is judged according to how well it works under a given model; the evaluation is therefore meaningful only as far as the threat model reflects reality. Without grounding in reality, researchers risk running an imaginary arms race that evolves independently of the real one.

I took part, with Michael Carl Tschantz, Sadia Afroz, and Vern Paxson, in a meta-study [182] of how circumvention systems are evaluated by their authors and designers, and of how those evaluations compare with empirically determined censor models. This kind of work is rather different from the direct evaluations of circumvention tools that have happened before, for example those done by the Berkman Center [162] and Freedom House [26] in 2011. Rather than testing tools against censors, we evaluated how closely aligned designers’ own models were to models derived from actual observations of censors.

This research was partly born out of frustration with some typical assumptions made in academic research on circumvention, which we felt placed undue emphasis on steganography and obfuscation of traffic streams, while not paying enough attention to the perhaps more important problems of proxy distribution and initial rendezvous between client and proxy. We wanted to help bridge the gap by laying out a research agenda to align the incentives of researchers with those of circumventors. This work was built on extensive surveys of circumvention tools, measurement studies, and known censorship events against Tor. Our survey included over 50 circumvention tools.

One outcome of the research is that academic designs tended to be concerned with detection in the steady state after a connection is established (related to detection by content), while actually deployed systems cared more about how the connection is established initially (related to detection by address). Designers tend to misperceive the censor’s weighting of false positives and false negatives—assuming a whitelist rather than a blacklist, say. Real censors care greatly about the cost of running detection, and prefer cheap, passive, stateless solutions whenever possible. It is important to guard against these modes of detection before becoming too concerned with those that require sophisticated computation, packet flow blocking, or lots of state.

Chapter 4

Active probing

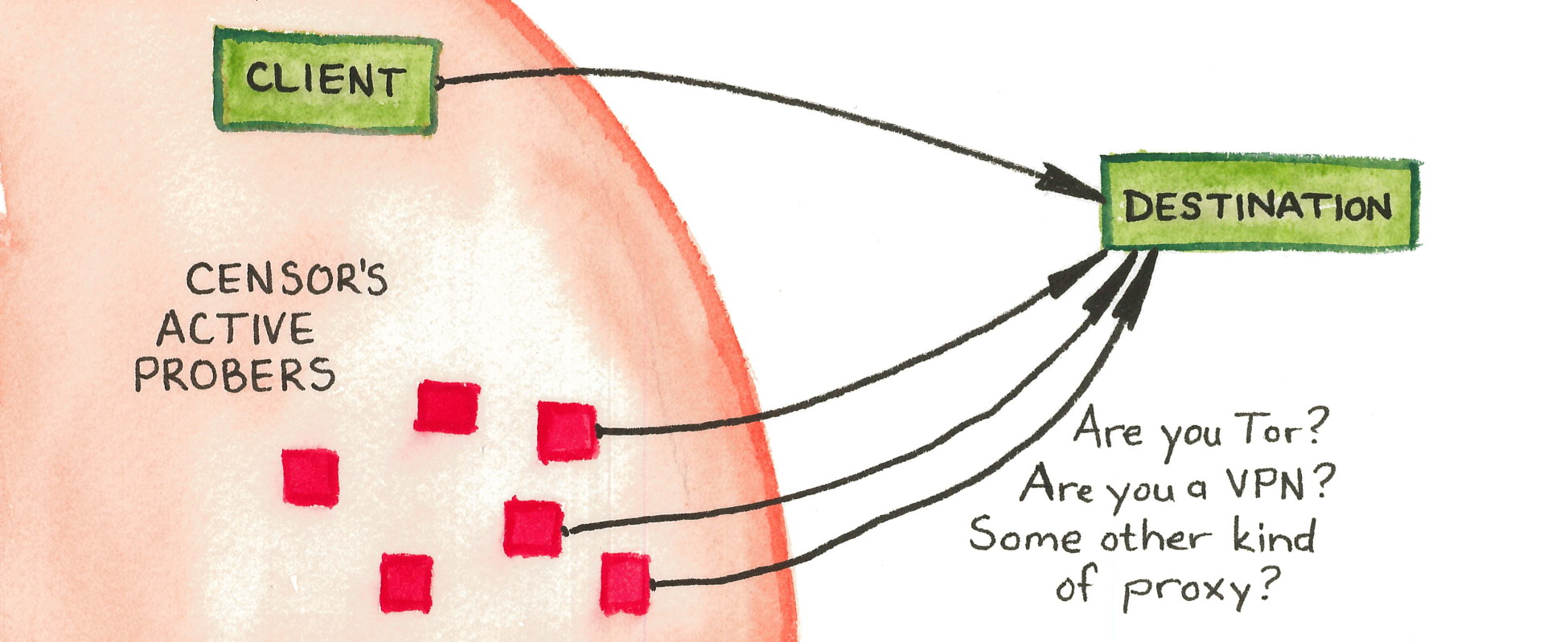

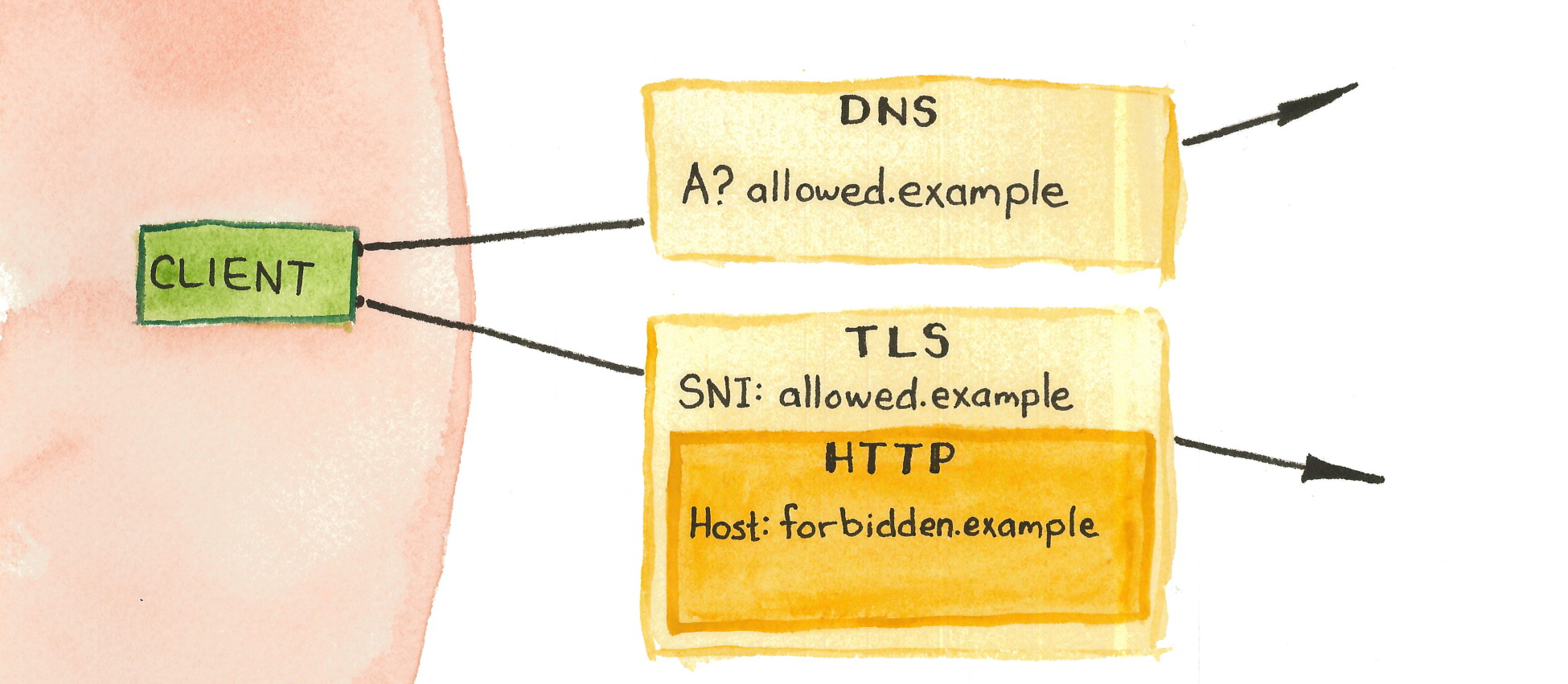

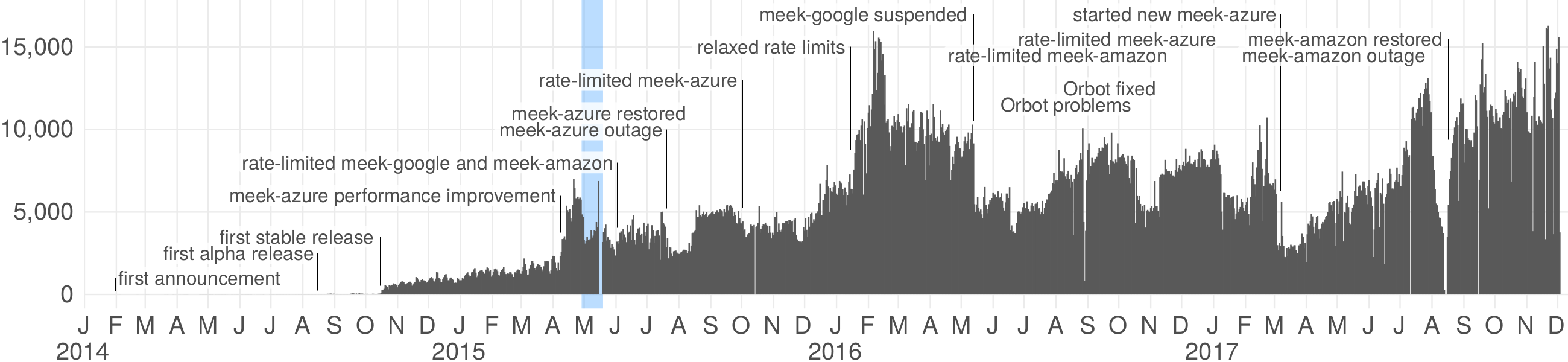

The Great Firewall of China rolled out an innovation in the identification of proxy servers around 2010: active probing of suspected proxy addresses. In active probing, the censor pretends to be a legitimate client, making its own connections to suspected addresses to see whether they speak a proxy protocol. Any addresses that are found to be proxies are added to a blacklist so that access to them will be blocked in the future. The input to the active probing subsystem, a set of suspected addresses, comes from passive observation of the content of client connections. The censor sees a client connect to a destination and tries to determine, by content inspection, what protocol is in use. When the censor’s content classifier is unsure whether the protocol is a proxy protocol, it passes the destination address to the active probing subsystem. The active prober then checks, by connecting to the destination, whether it actually is a proxy. Figure 4.1 illustrates the process.
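The two-stage process can be sketched as a small pipeline. Everything here is hypothetical: `classify` stands in for the censor’s passive content classifier and `probe` for an active connection that tests whether an address speaks a proxy protocol. In the toy usage, the probe is simulated with a lookup table instead of real network connections, and the active stage runs after the passive one rather than asynchronously.

```python
from collections import deque
from typing import Callable, Iterable


def active_probing_pipeline(
    connections: Iterable[tuple[str, bytes]],
    classify: Callable[[bytes], str],
    probe: Callable[[str], bool],
) -> set[str]:
    """Return the set of destination addresses that end up blacklisted.

    classify(payload) returns "proxy", "not proxy", or "unsure";
    probe(address) returns True if address speaks a proxy protocol.
    """
    blacklist: set[str] = set()
    suspects: deque = deque()
    # Passive stage: inspect the content of each client connection.
    for destination, payload in connections:
        verdict = classify(payload)
        if verdict == "proxy":
            blacklist.add(destination)
        elif verdict == "unsure":
            suspects.append(destination)
    # Active stage: probe each suspected address once.
    probed: set[str] = set()
    while suspects:
        destination = suspects.popleft()
        if destination in blacklist or destination in probed:
            continue
        probed.add(destination)
        if probe(destination):
            blacklist.add(destination)
    return blacklist


def toy_classify(payload: bytes) -> str:
    # Recognizable plaintext HTTP passes; anything else is "unsure".
    return "not proxy" if payload.startswith(b"GET ") else "unsure"


PROXIES = {"203.0.113.5"}  # simulated: which addresses really run a proxy


def toy_probe(address: str) -> bool:
    return address in PROXIES


conns = [("198.51.100.1", b"GET / HTTP/1.1\r\n"),
         ("203.0.113.5", b"\x8f\x02\xa1\x5c"),
         ("192.0.2.9", b"\x17\x03\x03\x00")]
print(active_probing_pipeline(conns, toy_classify, toy_probe))  # {'203.0.113.5'}
```

The unrecognized connection to 192.0.2.9 is suspected but not blocked, because the probe does not confirm it.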

Active probing makes good sense for the censor, whose main restriction is the risk of false-positive classifications that result in collateral damage. Any classifier based purely on passive content inspection must be very precise (have a low rate of false positives). Active probing increases precision by blocking only those servers that are determined, through active inspection, to really be proxies. The censor can get away with a mediocre content classifier—it can return a superset of the set of actual proxy connections, and active probes will weed out its false positives. A further benefit of active probing, from the censor’s point of view, is that it can run asynchronously, separate from the firewall’s other responsibilities that require a low response time.
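The gain in precision can be made concrete with hypothetical numbers. Suppose a mediocre classifier flags 1,000 addresses, of which only 50 are proxies. Blocking everything it flags is equivalent to a “probe” that confirms every suspect; a real probe that rarely confirms a non-proxy raises precision sharply. The rates below are assumptions chosen for illustration.

```python
def blocking_precision(proxy_suspects: float, other_suspects: float,
                       probe_tpr: float = 1.0, probe_fpr: float = 0.0) -> float:
    """Fraction of blocked addresses that really are proxies.

    proxy_suspects / other_suspects: flagged addresses that are / are not proxies
    probe_tpr: probability that a probe confirms a real proxy
    probe_fpr: probability that a probe wrongly confirms a non-proxy
    """
    blocked_proxies = proxy_suspects * probe_tpr
    blocked_others = other_suspects * probe_fpr
    return blocked_proxies / (blocked_proxies + blocked_others)


print(blocking_precision(50, 950, probe_tpr=1.0, probe_fpr=1.0))   # 0.05
print(blocking_precision(50, 950, probe_tpr=1.0, probe_fpr=0.01))  # roughly 0.84
```

Probing can only remove false positives; it cannot find proxies the classifier never flagged, which is why the classifier may return a superset of proxy connections but not a subset.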

Active probing, as I use the term in this chapter, is distinguished from other types of active scans by being reactive, driven by observation of client connections. It is distinct from proactive, wide-scale port scanning, in which a censor regularly scans likely ports across the Internet to find proxies, independent of client activity. The potential for the latter kind of scanning has been appreciated for over a decade. Dingledine and Mathewson [49 §9.3] raised scanning resistance as a consideration in the design document for Tor bridges. McLachlan and Hopper [136 §3.2] observed that the bridges’ tendency to run on a handful of popular ports would make them more discoverable in an Internet-wide scan, which they estimated would take weeks using then-current technology. Dingledine [46 §6] mentioned indiscriminate scanning as one of ten ways to discover Tor bridges, while also bringing up the possibility of reactive probing, which the Great Firewall was then just beginning to use. Durumeric et al. [57 §4.4] demonstrated the effectiveness of Internet-wide scanning, targeting only two ports to discover about 80% of public Tor bridges in only a few hours. Tsyrklevich [183] and Matic et al. [133 §V.D] later showed how existing public repositories of Internet scan data could reveal bridges, without even the necessity of running one’s own scan.

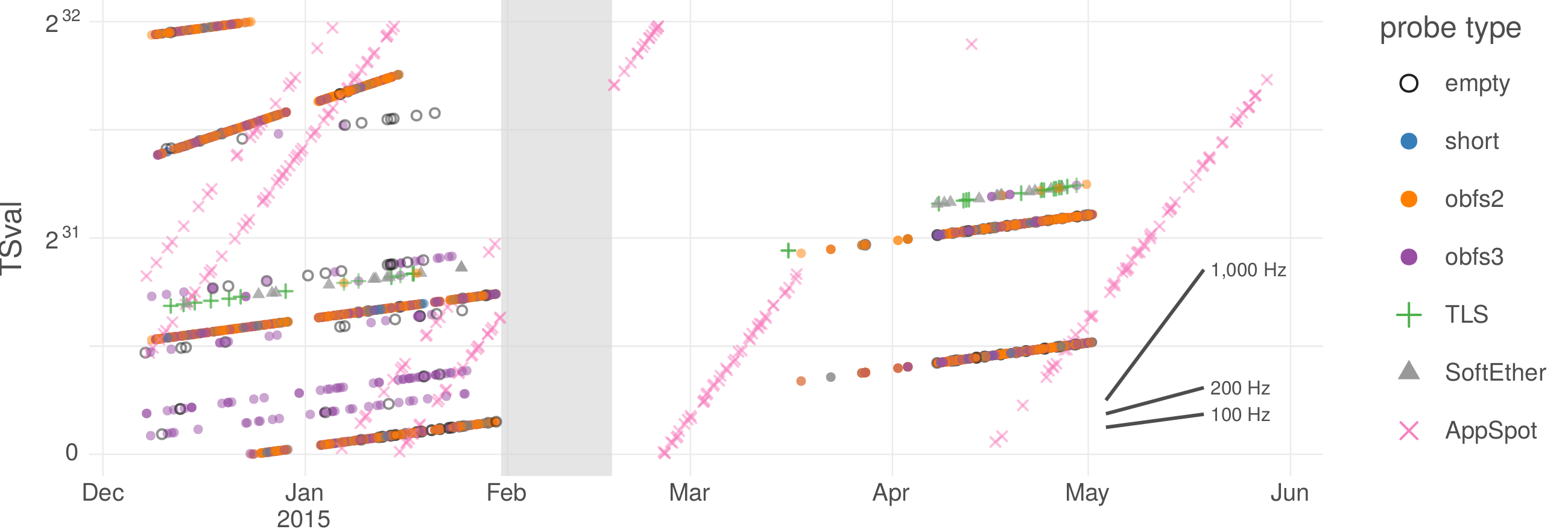

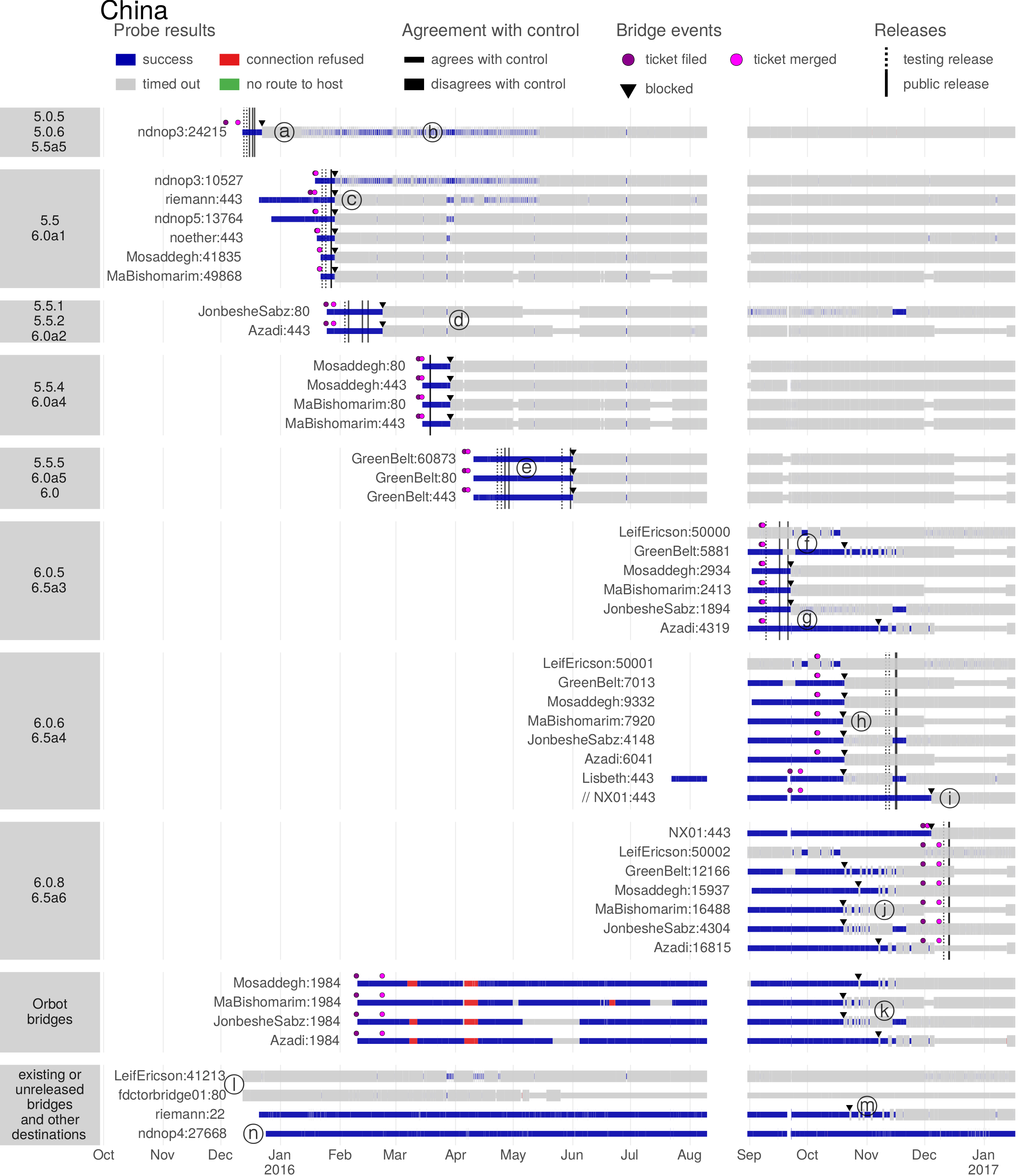

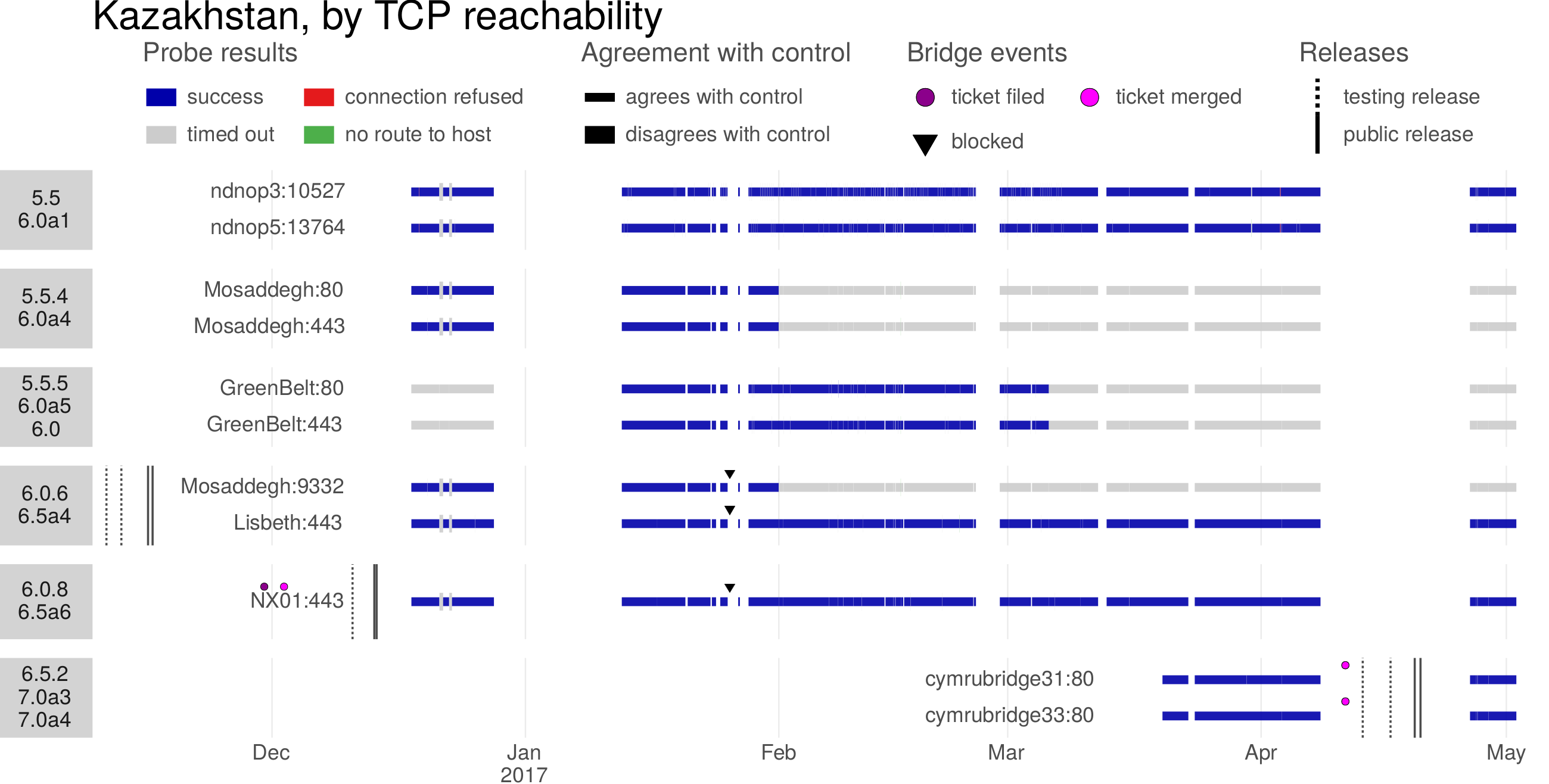

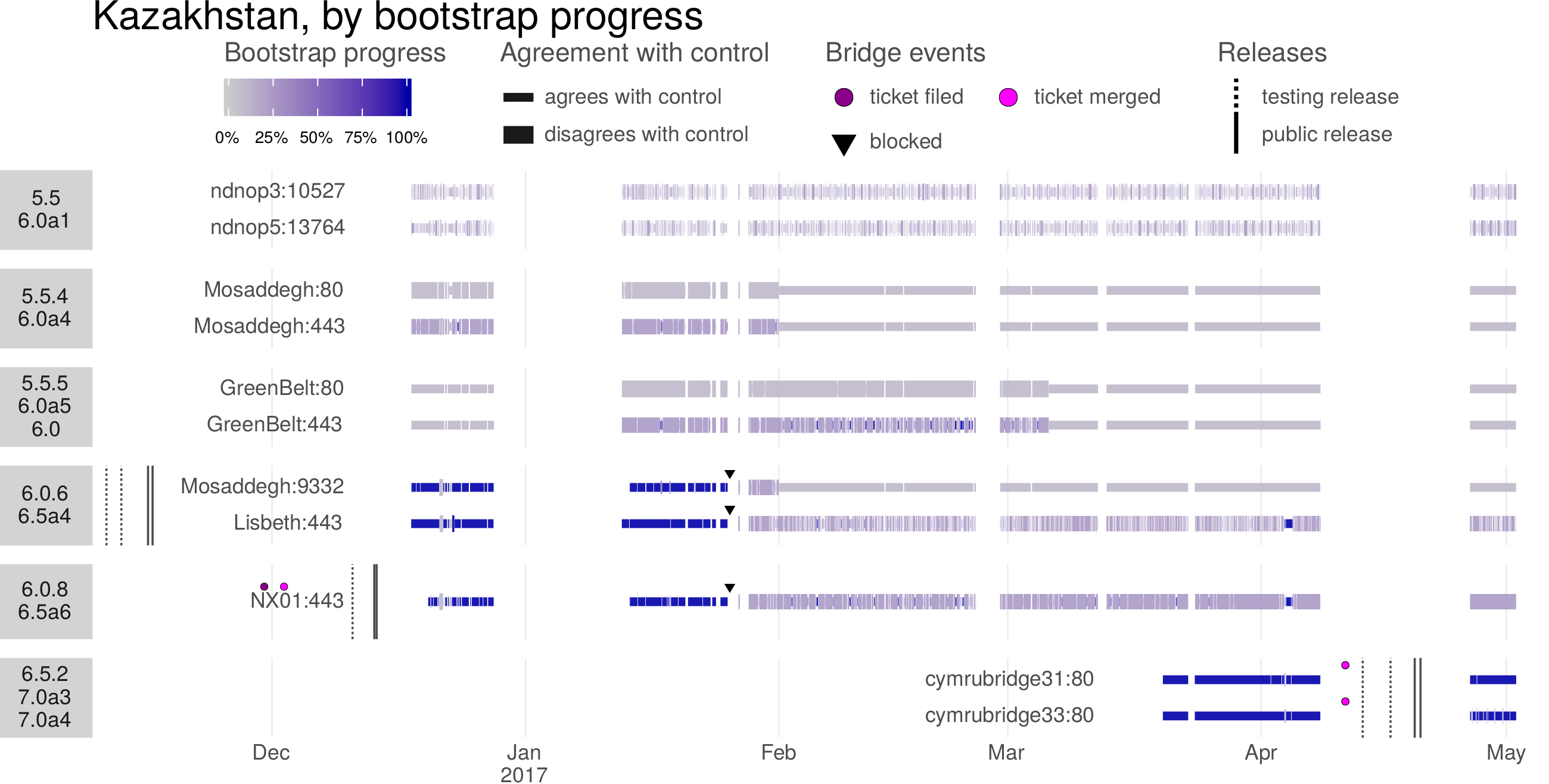

The Great Firewall of China is the only censor known to employ active probing. It has increased in sophistication over time, adding support for new protocols and reducing the delay between a client’s connection and the sending of probes. The Great Firewall has the documented ability to probe the plain Tor protocol and some of its pluggable transports, as well as certain VPN protocols and certain HTTPS-based proxies. Probing takes place only seconds or minutes after a connection by a legitimate client, and the active-probing connections come from a large range of source IP addresses. The experimental results in this chapter all have to do with China.

Active probing occupies a space somewhere in the middle of the dichotomy, put forward in Chapter 2, of detection by content and detection by address. An active probing system takes suspected addresses as input and produces to-be-blocked addresses as output. But it is content-based classification that produces the list of suspected addresses in the first place. The use of active probing is, in a sense, a good sign for circumvention: it became relevant only after content obfuscation had gotten better. If a censor could easily identify the use of circumvention protocols by mere passive inspection, then it would not go to the extra trouble of active probing.

Contemporary circumvention systems must be designed to resist active probing attacks. The strategy of the look-like-nothing systems ScrambleSuit [200], obfs4 [206], and Shadowsocks [126, 156] is to authenticate clients using a per-proxy password or public key; i.e., to require some additional secret information beyond just an IP address and port number. Domain fronting (Chapter 6) deals with active probing by co-locating proxies with important web services: the censor can tell that circumvention is taking place but cannot block the proxy without unacceptable collateral damage. In Snowflake (Chapter 7), proxies are web browsers running ordinary peer-to-peer protocols, authenticated using a per-connection shared secret. Even if a censor discovers one of Snowflake’s proxies, it cannot verify that the proxy is running Snowflake or something else, without having first negotiated a shared secret through Snowflake’s broker mechanism.
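The idea of requiring a secret beyond the address and port can be illustrated with a toy handshake. This is not the ScrambleSuit, obfs4, or Shadowsocks handshake, each of which differs in detail; it assumes only that client and proxy share a per-proxy secret distributed out of band. A prober that knows the address but not the secret cannot produce a valid first message, and a proxy that reveals nothing on failure gives the prober nothing to recognize.

```python
import hashlib
import hmac
import os

NONCE_LEN = 16
MAC_LEN = hashlib.sha256().digest_size  # 32 bytes


def client_hello(secret: bytes) -> bytes:
    """First message from a client: a random nonce followed by its HMAC."""
    nonce = os.urandom(NONCE_LEN)
    return nonce + hmac.new(secret, nonce, hashlib.sha256).digest()


def server_accepts(secret: bytes, hello: bytes) -> bool:
    """True if hello proves knowledge of secret. On False, the proxy should
    not respond in any way that identifies it as a proxy."""
    if len(hello) < NONCE_LEN + MAC_LEN:
        return False
    nonce = hello[:NONCE_LEN]
    mac = hello[NONCE_LEN:NONCE_LEN + MAC_LEN]
    expected = hmac.new(secret, nonce, hashlib.sha256).digest()
    return hmac.compare_digest(mac, expected)  # constant-time comparison


secret = os.urandom(32)
assert server_accepts(secret, client_hello(secret))             # legitimate client
assert not server_accepts(secret, os.urandom(64))               # random probe
assert not server_accepts(os.urandom(32), client_hello(secret))  # wrong secret
```

A censor that records a legitimate client’s first message and replays it would pass this check; a deployable design also needs replay protection, which this sketch omits.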

4.1 History of active probing research

Active probing research has mainly focused on Tor and its pluggable transports. There is also some work on Shadowsocks. Table 4.2 summarizes the research covered in this section.

| | |
| --- | --- |
| Nixon notices unusual, random-looking connections from China in SSH logs [143]. | |
| – | Nixon’s random-looking probes are temporarily replaced by TLS probes before changing back again [143]. |
| hrimfaxi reports that Tor bridges are quickly detected by the GFW [41]. | |
| Nixon publishes observations and hypotheses about the strange SSH connections [143]. | |
| Wilde investigates Tor probing [48, 193, 194]. He finds two kinds of probe: “garbage” random probes and Tor-specific ones. | |
| The obfs2 transport becomes available [43]. The Great Firewall is initially unable to probe for it. | |
| Winter and Lindskog investigate Tor probing in detail [199]. | |
| The Great Firewall begins to active-probe obfs2 [47, 60 §4.3]. The obfs3 transport becomes available [68]. | |
| – | Majkowski observes TLS and garbage probes and identifies fingerprintable features of the probers [128]. |
| The Great Firewall begins to active-probe obfs3 [60 Figure 8]. | |