GFPGAN aims at developing Practical Algorithms for Real-world Face Restoration.

| 简体中文

- Colab Demo for GFPGAN

; (Another Colab Demo for the original paper model)

- Online demo: Huggingface (return only the cropped face)

- Online demo: Replicate.ai (may need to sign in, return the whole image)

- Online demo: Baseten.co (backed by GPU, returns the whole image)

- We provide a clean version of GFPGAN, which can run without CUDA extensions. So that it can run in Windows or on CPU mode.

🚀 Thanks for your interest in our work. You may also want to check our new updates on the tiny models for anime images and videos in Real-ESRGAN😊

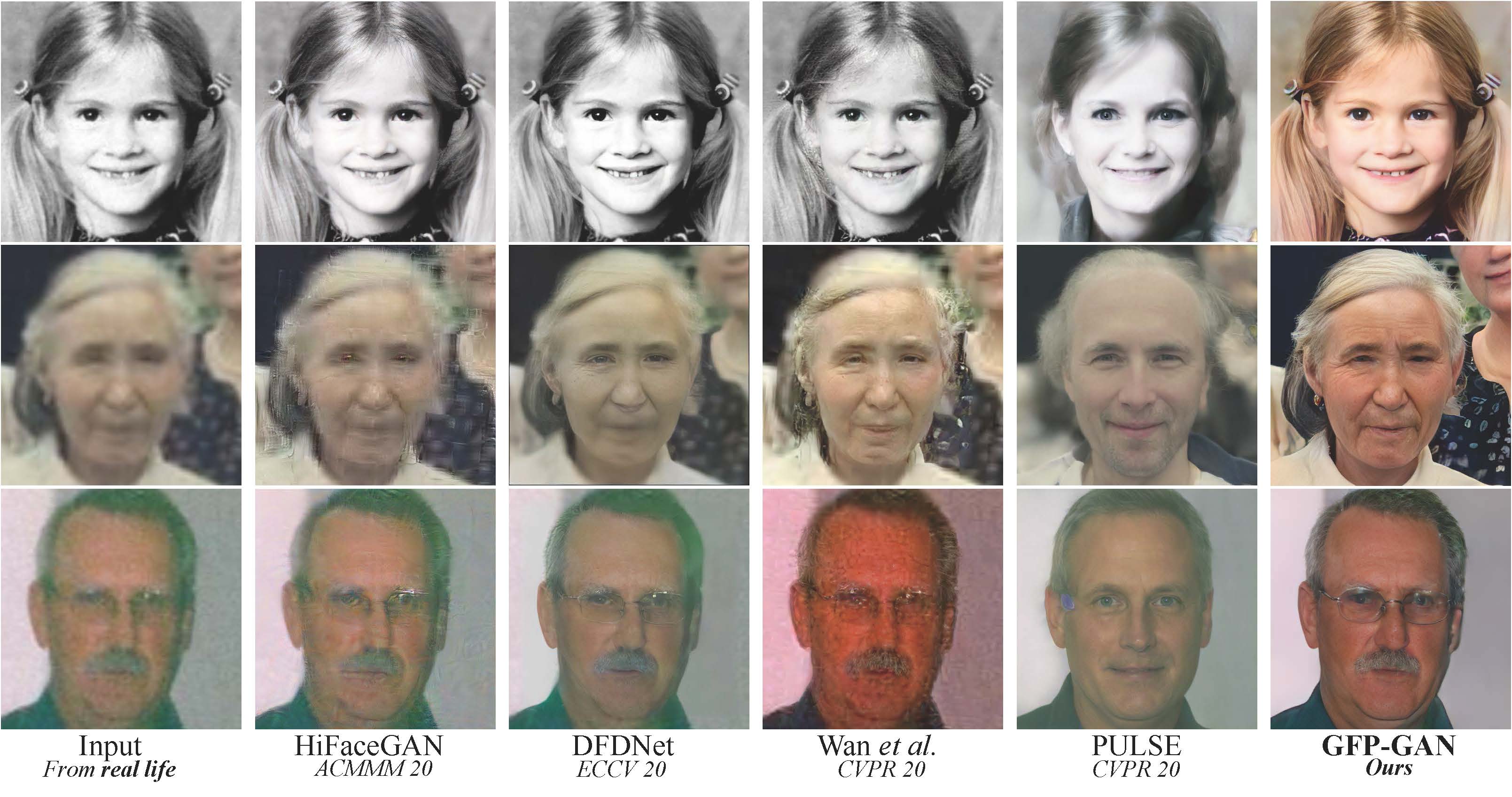

GFPGAN aims at developing a Practical Algorithm for Real-world Face Restoration.

It leverages rich and diverse priors encapsulated in a pretrained face GAN (e.g., StyleGAN2) for blind face restoration.

🔥 🔥 ✅ Add V1.3 model, which produces more natural restoration results, and better results on very low-quality / high-quality inputs. See more in Model zoo, Comparisons.md✅ Integrated to Huggingface Spaces with Gradio. See Gradio Web Demo.✅ Support enhancing non-face regions (background) with Real-ESRGAN.✅ We provide a clean version of GFPGAN, which does not require CUDA extensions.✅ We provide an updated model without colorizing faces.

If GFPGAN is helpful in your photos/projects, please help to

📖 GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

[Paper] [Project Page] [Demo]

Xintao Wang, Yu Li, Honglun Zhang, Ying Shan

Applied Research Center (ARC), Tencent PCG

🔧 Dependencies and Installation

- Python >= 3.7 (Recommend to use Anaconda or Miniconda)

- PyTorch >= 1.7

- Option: NVIDIA GPU + CUDA

- Option: Linux

Installation

We now provide a clean version of GFPGAN, which does not require customized CUDA extensions.

If you want to use the original model in our paper, please see PaperModel.md for installation.

Clone repo

git clone https://github.com/TencentARC/GFPGAN.git cd GFPGANInstall dependent packages

# Install basicsr - https://github.com/xinntao/BasicSR # We use BasicSR for both training and inference pip install basicsr # Install facexlib - https://github.com/xinntao/facexlib # We use face detection and face restoration helper in the facexlib package pip install facexlib pip install -r requirements.txt python setup.py develop # If you want to enhance the background (non-face) regions with Real-ESRGAN, # you also need to install the realesrgan package pip install realesrgan

⚡ Quick Inference

We take the v1.3 version for an example. More models can be found here.

Download pre-trained models: GFPGANv1.3.pth

wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P experiments/pretrained_modelsInference!

python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2Usage: python inference_gfpgan.py -i inputs/whole_imgs -o results -v 1.3 -s 2 [options]...

-h show this help

-i input Input image or folder. Default: inputs/whole_imgs

-o output Output folder. Default: results

-v version GFPGAN model version. Option: 1 | 1.2 | 1.3. Default: 1.3

-s upscale The final upsampling scale of the image. Default: 2

-bg_upsampler background upsampler. Default: realesrgan

-bg_tile Tile size for background sampler, 0 for no tile during testing. Default: 400

-suffix Suffix of the restored faces

-only_center_face Only restore the center face

-aligned Input are aligned faces

-ext Image extension. Options: auto | jpg | png, auto means using the same extension as inputs. Default: autoIf you want to use the original model in our paper, please see PaperModel.md for installation and inference.

🏰 Model Zoo

| Version | Model Name | Description |

|---|---|---|

| V1.3 | GFPGANv1.3.pth | Based on V1.2; more natural restoration results; better results on very low-quality / high-quality inputs. |

| V1.2 | GFPGANCleanv1-NoCE-C2.pth | No colorization; no CUDA extensions are required. Trained with more data with pre-processing. |

| V1 | GFPGANv1.pth | The paper model, with colorization. |

The comparisons are in Comparisons.md.

Note that V1.3 is not always better than V1.2. You may need to select different models based on your purpose and inputs.

| Version | Strengths | Weaknesses |

|---|---|---|

| V1.3 | ✓ natural outputs ✓better results on very low-quality inputs ✓ work on relatively high-quality inputs ✓ can have repeated (twice) restorations | ✗ not very sharp ✗ have a slight change on identity |

| V1.2 | ✓ sharper output ✓ with beauty makeup | ✗ some outputs are unnatural |

You can find more models (such as the discriminators) here: [Google Drive], OR [Tencent Cloud 腾讯微云]

💻 Training

We provide the training codes for GFPGAN (used in our paper).

You could improve it according to your own needs.

Tips

- More high quality faces can improve the restoration quality.

- You may need to perform some pre-processing, such as beauty makeup.

Procedures

(You can try a simple version ( options/train_gfpgan_v1_simple.yml) that does not require face component landmarks.)

Dataset preparation: FFHQ

Download pre-trained models and other data. Put them in the

experiments/pretrained_modelsfolder.Modify the configuration file

options/train_gfpgan_v1.ymlaccordingly.Training

python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 gfpgan/train.py -opt options/train_gfpgan_v1.yml --launcher pytorch

from https://github.com/TencentARC/GFPGAN

(GFPGAN

演示地址:https://replicate.com/tencentarc/gfpgan

一个老照片恢复到高清的项目,旨在开发用于真实世界的人脸恢复的实用算法,可以用来恢复老照片或改善人工智能生成的人脸,效果还可以,主要是修复人脸,对于其它地方的修复不太理想。

https://github.com/TencentARC/GFPGAN)

(GFPGAN-腾讯开源AI老照片修复工具

GFPGAN是腾讯开源的人脸修复算法,它利用预先训练好的面部GAN(例如StyleGAN2)中封装的丰富和多样的先验因素进行盲脸(blind face) 修复,旨在开发一种用于现实世界人脸恢复的实用算法。

试了一下在线的demo,修复了一下老照片,效果非常惊艳,感兴趣的同学可以试试,使用GitHub登录即可。

演示地址:https://replicate.com/tencentarc/gfpgan

GitHub:https://github.com/TencentARC/GFPGAN)

--------------------------------------------------------

图片无损放大工具,马赛克画质秒变高清大图!

一位作者利用腾讯ARC实验室最新的图像超分辨率模型制作了一款图片高清放大工具。

这款工具相较于其他的图片高清放大工具,可以说是超级简单,界面简单,操作简单。

你只需要将你要放大的图片导入软件,然后点击生成按钮,稍等片刻,即可获得放大后的高清图片(放大后的图片和原图片在同一路径)。亲自测试了一下, 效果非常明显。

是不是整个世界都清晰了呢?腾讯ARC实验室的这个图像超分辨率模型Real-ESRGAN是基于ESRGAN的改进研究,重点考虑了消除低分辩率图像中的振铃和伪影,对真实风景图片能更加恢复其细节。

感兴趣的小伙伴可以下载图片自己亲自试一试!

项目地址:https://github.com/xinntao/Real-ESRGAN

-------------------------------------------

基于腾讯 ARC 的 AI 图片无损放大工具:AI LOSSLESS ZOOMER

大家平时在网上看到喜欢的图片,希望将它作为手机/电脑壁纸,又或者作为一些设计素材,但是可能图片的像素尺寸太低,导致设置为壁纸不清晰。所以你可能会找图片无损放大的工具。

目前比较流行的图片无损放大软件例如有:waifu2x、PhotoZoom、Topaz A.I. Gigapixel 等软件。无损放大的原理,一方面是通过 AI 的学习来放大图片像素,另一种是通过软件本身的算法。

今天给大家分享网友基于腾讯 ARC Lab 的 Real-ESRGAN 模型而开发的 AI 图片无损放大软件。据作者介绍,目前这个模型主要来源人像,对人像图片放大会有一个不错的效果,特别是动漫的图片。

AI无损放大工具介绍

AI 无损放大工具已包含最新 AI 引擎,解压直接运行就能使用。系统方面支持 Windows 7 或以上系统,需要安装 net framework 4.6 框架。

在设置里面,你可以看到引擎核心和模块,如果你需要输出指定目录,也可以先设置。

接着打开你需要无损放大的图片,格式支持 PNG\JPG\BMP,支持批量图片自动无损放大,然后点击开始任务,耐心等待一会。

锋哥随便找的一张图,分辨率为 483 x 586,无损放大生成后的尺寸为 1720 x 2344 大小。通过放大对比细节,可以看到效果还是很不错的。

功能特色

支持多线程处理

支持批量图片处理

支持设置选项

支持自定义输出格式和自定义输出路径

支持AI引擎选择

支持批量清理任务

总结

对于急需无损图片放大功能的用户来说,这款工具还是不错的,使用简单方便,唯一不足就是不能手动设置图片无损放大的尺寸。但是好在作者还开源了代码,动手能力强的小伙伴可以自己二次开发、研究学习。

下载:

https://xia1ge.lanzoui.com/iGfVuutpeve

项目地址:

https://github.com/X-Lucifer/AI-Lossless-Zoomer

Real-ESRGAN:

https://github.com/xinntao/Real-ESRGAN

--------------------------------------------------------

Real ESRGAN- 一个旨在开发恢复通用图像的实用算法,可以用来对低分辨率图片完成四倍放大和修复,化腐朽为神奇.

在线体验 |中文文档 | 安卓APP

不要被算法吓到了,这是一个开箱即用的图像/视频修复程序,你可以直接在文档页面找到 Windows版 / Linux版 / macOS版的程序下载地址

项目提供了 5 个模型供你使用,你可以分别对照片、动漫插画、动漫视频等进行修复.

--------------

Real-CUGAN,B站推出的图像视频AI超分算法模型(AI放大)

随着科学技术的不断发展尤其是人工智能、云计算、机器学习等技术在图像视频领域大展身手,图像智能化处理变得越来越普及化,各种基于AI云计算、机器学习的应用层出不穷,例如国外的Topaz产品,基于AI算法可对图像、视频进行智能化降噪、锐化、放大,以进一步对老旧图像视频就行画质修复,使之重焕光彩。

除了像Topaz商业应用外,近些年开源免费的超分算法模型也屡见不鲜,例如之前阿刚给大家推荐过的Waifu2x,它是目前最火爆的图像超分算法之一。

最近,B站开发了一个专门针对二次元图像的Real-CUGAN算法模型,效果惊艳!

Real-CUGAN,B站推出的二次元图像AI超分算法模型

Real-CUGAN是一个使用百万级动漫数据进行训练的,结构与Waifu2x兼容的通用动漫图像超分辨率模型。它支持2x\3x\4x倍超分辨率,其中2倍模型支持4种降噪强度与保守修复,3倍/4倍模型支持2种降噪强度与保守修复。

什么是超分技术

先简单说下超分技术,通俗的理解,就是将图像/视频的分辨率放大,它是一种底层图像处理任务,将低分辨率的图像映射至高分辨率,以此达到增强图像细节的作用。

超分辨率重建的方法有多种,目前的主流是基于机器深度学习,即先将高分辨率图像按照降质模型进行降质,产生训练模型,根据高分辨率图像的低频部分和高频部分对应关系对图像分块,通过一定算法进行学习,获得先验知识,建立模型,最后即可应用,实现低质图像进行高清化处理。

Real-CUGAN全称Real Cascade U-Nets for Anime Image Super Resolution,其模型结构魔改自Waifu2x官方CUNe,训练代码主要参考腾讯发布的RealESRGAN。

主要优势:

百万训练集:Real-CUGAN的高清私有训练集块数量高达百万级,Waifu2x与Real-ESRGAN均为私有库,量级与质量未知

优秀的细节效果:更锐利的线条,更好的纹理保留,虚化区域保留

良好的兼容性:同Waifu2x结构相同,但参数不同,与Waifu2x无缝兼容

可调节强度:目前有4种降噪程度版本和保守版,后续将支持调节不同去模糊、去JPEG伪影、锐化、降噪强度

速度:Real-CUGAN、Waifu2x均约为Real-ESRGAN的2.2倍速度;约为通用型Real-ESRGAN模型的8.4倍速度。

官方针对纹理、线条、“渣清”以及景深四种图片特点,分别使用Waifu2x、腾讯的RealESRGAN进行处理,制作了一组最终的效果对比图,阿刚这里选了纹理和渣清对比最明显的两组图,大家可以放大看原图直观比较下:

在这种对比图中,地板纹理Real-CUGAN和Waiuf2x的处理效果大差不差,Waifu2x只有降噪,并未对线条做优化,而且它的锐利度也是最小的,两者的区别很小,腾讯的RealESRGAN纹理保留性最差,较为模糊、细节有所丢失,表现较弱。

综合比较的话,Real-CUGAN处理的最好。

没有什么比一张渣渣图片更让人一目了然了,原图是腾讯Real-ESRGAN的官方测试样例,在对比图中Real-CUGAN明显要优于Waiuf2x、RealESRGAN,画面清晰、更干净。

如何使用

Real-CUGAN的开发团队目前为Windows平台打包了一个可执行环境,在下载解压后,运行go.bat运行即可,另外在处理之前,还需修改config.py配置参数,在项目主页上,官方有专门详细说明各项参数,大家可自行参阅。

由于主要是命令行界面,有一定的上手难度,对于我们而言,最简单的当然还是使用GUI界面,方便快捷。目前Squirrel补帧团队基于RealCUGAN(PyTorch版本)与Waifu2x/RealESRGAN开发了一个图形界面程序,已经免费发布了,用起来非常顺手,阿刚下面简单说下。

Squirrel Anime Enhance,基于多个开源超分算法的中文超分软件

Squirrel Anime Enhance是著名“补帧团队”Squirrel开发一款的基于多个开源超分算法的超分软件,目前主要包括realCUGAN, realESR, waifu2x知名算法,它提供了简单易用的GUI界面,让你快速上手,轻松处理图片、视频。

主要特性:

集成了 realCUGAN, realESR, waifu2x 三种超分算法

拥有友好的 GUI 图形界面,方便使用

使用pipe传输视频帧,无需拆帧到本地,拯救硬盘

更小的显存、内存占用,更快的速度

拥有预览界面,能更好地了解超分情况

Squirrel Anime Enhance使用简单,解压后,双击启动SAE.bat即可启动软件。

1,输入图片/视频

在Squirrel Anime Enhance中,你可以导入要处理的视频与图片序列,但不支持单张图片。

如果导入的是图片序列,务必要保证文件命名规范(等长),例如input 0001.png, input 0002.png, …另外不支持交错或带有透明通道的图片

2,设置超分算法与模型

如前面所述,目前主要集成了realCUGAN, realESR,和waifu2x三种超分算法,每一种超分算法下都有相对应的超分模型,大家可以针对视频特征自行选择,简单说下:

waifu2x:

models cunet:一般用于动漫超分

models photo:一般用于实拍

models style anime:一般用于老动漫

realESR:

realESR提供了两类模型,其中:

realESRGAN模型:效果清晰,偏向于脑补;

realESRNet模型:效果模糊,偏向于涂抹

2x和4x模型速度差异并不是特别明显,但建议使用与输出分辨率最邻近的倍率模型,例如输入为270p,输出为1080p.建议使用4x模型;输入为270p,输出为540p,建议使用2x模型。

realCUGAN目前3倍4倍超分只有3个模型,2倍有4个不同降噪强度模型和1个保守模型,其中:

降噪版(Denoise):主要针对原片噪声多;

无降噪版(No-Denoise):如果原片噪声不多,压得还行,但是想提高分辨率/清晰度/做通用性的增强、修复处理,推荐使用;

保守版(conservative):如果担心丢失纹理,担心画风被改变,颜色被增强,总之就是各种担心AI会留下浓重的处理痕迹,推荐使用该版本。

在选择好合适的算法与模型后,便可以一键压制。阿刚用了一小段海贼王的,放至两倍,细节保留得当,最后的效果相当棒,

写在最后

开源的超分算法中,waifu2x功能强大,大家肯定耳熟能详了,腾讯推出的realESR实力也不容小觑,两者在动漫超分的效果上着实让人感到惊艳。

而B站这个开源的动漫超分模型,在速度、修复效果、处理痕迹上,表现更优秀,官方目前还在不断优化之中,如果你是个动漫爱好者,或者有放大视频的需要,可以试试B站realCUGAN,效果相当惊艳~

当然前提,你首先要有一台配置够强劲的电脑。

-------------------------------------------------

Real-ESRGAN GUI - AI 图片放大工具

AI 图片放大工具「Real-ESRGAN GUI」是 AI 图像修复算法 Real-ESRGAN 的开源图形界面,提供了 Windows、Linux、macOS 全平台支持,完全在本地运行,无需安装,绿色便携。

功能介绍

拖拽支持:将图片文件或目录拖拽到窗口上,即可自动设定输入和输出路径。

任意尺寸放大:根据设定的放大尺寸,多次调用 Real-ESRGAN 再进行降采样。

GIF 处理:将 GIF 逐帧放大后合并,保留动画效果。

批处理:批量放大指定目录内的所有图片。

官方地址:

https://akarin.dev/realesrgan-gui

项目地址:

https://github.com/TransparentLC/realesrgan-gui

No comments:

Post a Comment